Graphics card memory, or VRAM, has been a hot topic of discussion over the past few years because we're not really getting enough of it. It's crazy to think that 8 years ago, the GeForce GTX 1070 was Nvidia's first mainstream GPU to pack 8GB of VRAM for $450 – that's just shy of $600 in today's dollars when adjusting for inflation.

These days, that same $600 buys you a GeForce RTX 4070 Super, a GPU armed with just 12GB of VRAM. While that is more than 8GB, it is not a significant increase considering the time that has passed between GPU generations.

A bigger issue is seen with parts like the mid-range RTX 4060 Ti, which launched at $400 for the 8GB model. Later on, a 16GB version was introduced at $500, but it sold so poorly that it eventually received an official price cut to $450. Even with the price cut, it still isn't great value for what it offers.

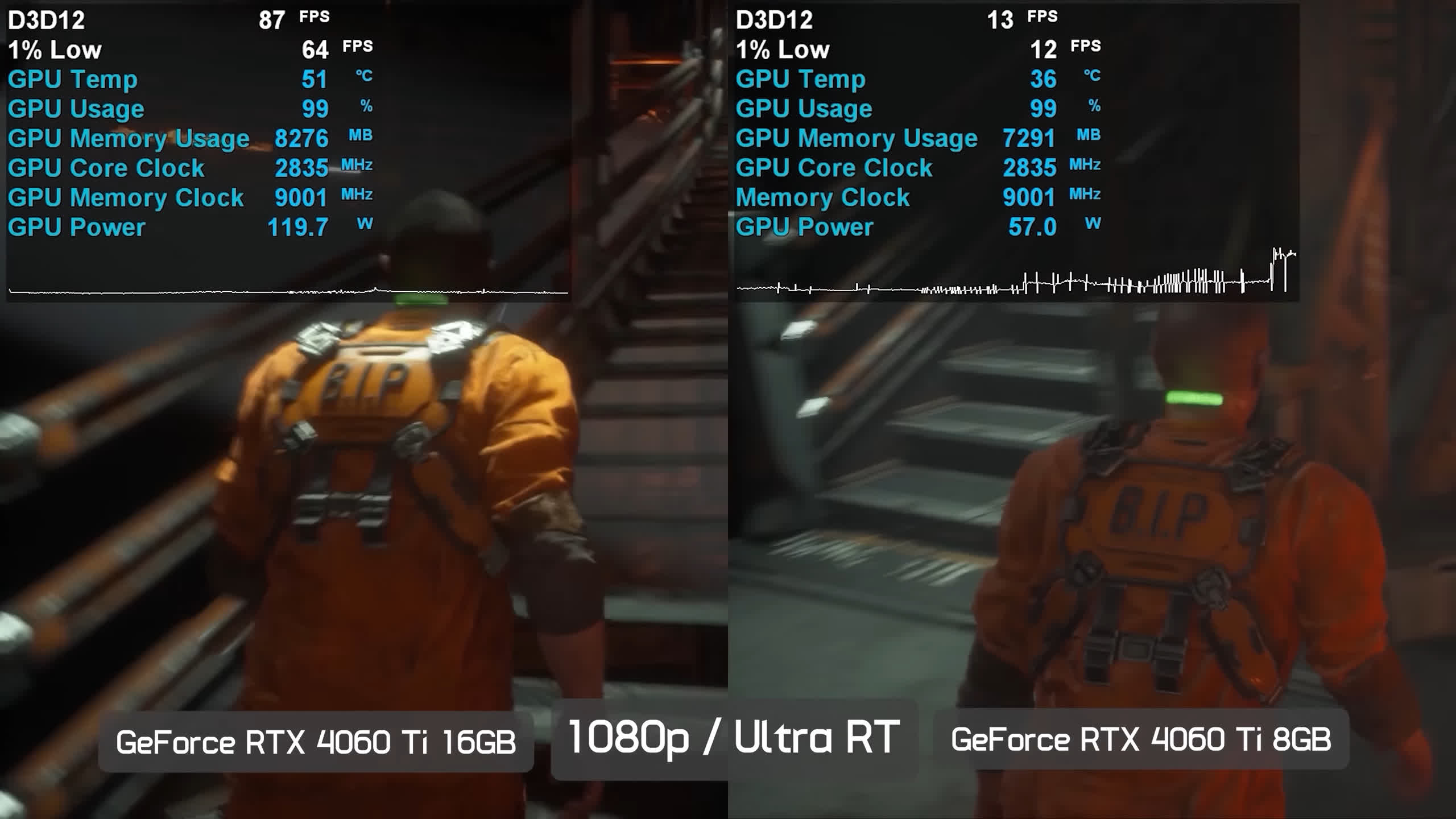

What the 8GB and 16GB versions of the RTX 4060 Ti have clearly demonstrated, though, is that 8GB of VRAM is now woefully inadequate for higher-end gaming. The clearest examples were seen when first reviewing the 16GB model. In several instances, the 16GB graphics card performed better, either delivering higher frame rates or a smoother experience.

What Does Running Out of VRAM Look Like?

Testing VRAM capacity can be tricky because not all games behave the same way when the VRAM buffer is exceeded. Games that run into performance-related issues are easy to point to. For example, when playing Horizon Forbidden West and comparing the 8GB and 16GB versions of the RTX 4060 Ti at 1440p using the "very high" preset, clear differences were observed.

In some in-game cutscenes like the one shown above, the 16GB model was up to 35% faster and had fewer frame time spikes, and the difference is more noticeable during gameplay. On average, the 16GB model was 40% faster and offered significantly better frame time performance, resulting in a much smoother and more consistent experience.

Granted, we are talking about sub-60 fps gaming here, but for many, this is still playable, especially for those who prioritize graphical fidelity. Even with DLSS upscaling, the 16GB model is much better, offering higher frame rates with better frame time consistency. In our tests, we saw the 16GB model achieving 73 fps on average compared to 49 fps for the 8GB model – that's nearly a 50% improvement, explained by having sufficient VRAM capacity.
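
The "nearly 50%" figure follows directly from the two averages quoted above. Here is a quick sketch of that arithmetic in Python; the function name is ours and purely illustrative.

```python
def percent_uplift(new_fps: float, old_fps: float) -> float:
    """Return how much faster new_fps is than old_fps, in percent."""
    if old_fps <= 0:
        raise ValueError("old_fps must be positive")
    return (new_fps / old_fps - 1.0) * 100.0


# Averages quoted in the text: 16GB model vs. 8GB model with DLSS upscaling.
uplift = percent_uplift(73, 49)
print(f"{uplift:.1f}% faster")  # prints "49.0% faster"
```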

That's one of the most obvious examples we can point to where 8GB of VRAM isn't enough to play a game in all of its glory. However, you can still play Horizon Forbidden West using an 8GB graphics card by dialing down the quality preset from 'very high' to 'high'.

On that note, if you're primarily into competitive multiplayer titles and use the kind of graphics settings that allow you to spot enemies more easily while maximizing frame rate, then this article doesn't really apply to you. Playing Call of Duty with basic settings or Fortnite in performance mode uses very little VRAM, so capacity is unlikely to be a concern for you.

But getting back to visually stunning single-player games or multiplayer titles with the eye candy cranked up, you're not always going to see performance-related issues like in Horizon Forbidden West. Many games manage memory usage differently, automatically dialing down quality settings or removing textures altogether.

Examples of this include Halo Infinite, which removes textures and decreases the level of detail for certain objects. Forspoken had a similar issue where the game looked washed out and muddy with insufficient VRAM. Today, the game manages textures better, but the result is still similar.

Upon release, Forspoken would fail to load textures at all without enough VRAM, resulting in a horrible-looking game. Now, it removes textures for everything not currently in view and tries to load them where they are most obvious. This results in texture pop-in and sometimes texture cycling, where high-quality textures appear and disappear, as we've also observed in Hogwarts Legacy.

The most common issues you're going to see when running out of VRAM include frame rate performance tanking, less consistent frame time performance and/or missing textures.

Having demonstrated these issues in over a dozen games, we wanted to tackle this subject differently by looking at how much VRAM today's games actually use, as opposed to how much they allocate, across a wide range of resolutions and quality settings. This kind of testing takes time, as games need to be reset between each quality setting change and played for at least 10 minutes to get an accurate read on memory usage.
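
To make the 10-minute rule concrete, here is a minimal, tool-agnostic sketch in Python. It is not the tooling used for this article. It assumes you already have a log of `(elapsed_seconds, used_mb)` readings from whatever monitoring utility you prefer, and it assumes the figure of interest is the peak reading over the run. The function name, the sample format and the choice of peak are all our assumptions.

```python
from typing import Iterable, Tuple

# The text plays each configuration for at least 10 minutes.
MIN_RUN_SECONDS = 10 * 60


def peak_usage_gb(samples: Iterable[Tuple[float, float]]) -> float:
    """Return the peak reading in GB from (elapsed_seconds, used_mb) samples.

    Raises ValueError if the log covers less than MIN_RUN_SECONDS, since a
    short run may not have loaded enough assets to be representative.
    Taking the peak (rather than, say, the average) is an assumption.
    """
    samples = list(samples)
    if not samples:
        raise ValueError("no samples")
    duration = samples[-1][0] - samples[0][0]
    if duration < MIN_RUN_SECONDS:
        raise ValueError(
            f"run lasted {duration:.0f}s, need at least {MIN_RUN_SECONDS}s"
        )
    peak_mb = max(used_mb for _, used_mb in samples)
    return peak_mb / 1024.0


# Made-up readings for illustration only, not measurements from this article.
log = [(0, 1024.0), (300, 4096.0), (600, 2048.0)]
print(f"peak: {peak_usage_gb(log):.1f}GB")  # prints "peak: 4.0GB"
```

Whatever tool supplies the readings, check whether it reports memory the game is actually using or merely memory it has allocated, as the two can differ considerably on a 24GB card.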

That's what we've done for this article, testing a dozen modern titles using the GeForce RTX 4090, which is ideal for this because it packs a 24GB buffer. While games tend to allocate more memory when more is available, we focused on memory usage. The numbers should be close to what is required for a given resolution and quality setting. Let's get into it…

Measuring VRAM Usage

Ratchet & Clank: Rift Apart

Starting with Ratchet & Clank: Rift Apart, this title tends to delete certain textures when running out of VRAM. It can also suffer from performance-related issues, making it difficult to identify insufficient VRAM in this game.

Ideally, the game wants an 8GB frame buffer for medium quality settings at 1080p, with high jumping up to 9.2GB and 'very high' hitting 10GB. It is possible to use the very high setting at 1080p on an 8GB GPU, but you will likely notice some frame time issues, and it's unlikely that all textures will be rendered at full quality.

Enabling ray tracing with the very high preset won't end well, as this uses 11.2GB of VRAM. If you enable frame generation, it will push you over 12GB.

At 1440p, there is a 7% increase in memory usage compared to 1080p, making it difficult to play with an 8GB GPU using very high settings and nearly impossible with ray tracing enabled.

Finally, there is an 8-10% increase in memory usage when jumping to 4K resolution. For a high-end GPU, you really want 16GB of VRAM. The 12GB models will work with the very high preset, but if you want to enable ray tracing or frame generation, you'll ideally want 16GB.
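
As a rough cross-check, the percentages above can be applied to the 1080p figures to estimate usage at higher resolutions. The Python sketch below does that. It assumes the "8-10%" 4K increase is relative to 1440p, which the text does not state explicitly. The outputs are estimates derived from the quoted numbers, not measurements.

```python
# 1080p figures quoted in the text for Ratchet & Clank: Rift Apart, in GB.
USAGE_1080P_GB = {
    "medium": 8.0,
    "high": 9.2,
    "very high": 10.0,
    "very high + RT": 11.2,
}

SCALE_1440P = 1.07       # "7% increase" over 1080p
SCALE_4K = (1.08, 1.10)  # "8-10% increase"; ASSUMED to be relative to 1440p


def estimate(usage_1080p_gb: float) -> dict:
    """Estimate 1440p and 4K usage from a 1080p figure using the quoted percentages."""
    at_1440p = usage_1080p_gb * SCALE_1440P
    low, high = (at_1440p * scale for scale in SCALE_4K)
    return {"1440p": at_1440p, "4K": (low, high)}


for preset, gb in USAGE_1080P_GB.items():
    e = estimate(gb)
    print(
        f"{preset:>15}: 1440p ~{e['1440p']:.1f}GB, "
        f"4K ~{e['4K'][0]:.1f}-{e['4K'][1]:.1f}GB"
    )
```

Under these assumptions, the very high preset lands a little under 12GB at 4K, while very high with ray tracing lands around 13GB. That is consistent with the advice that 12GB cards cope with very high but 16GB is preferable for ray tracing.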

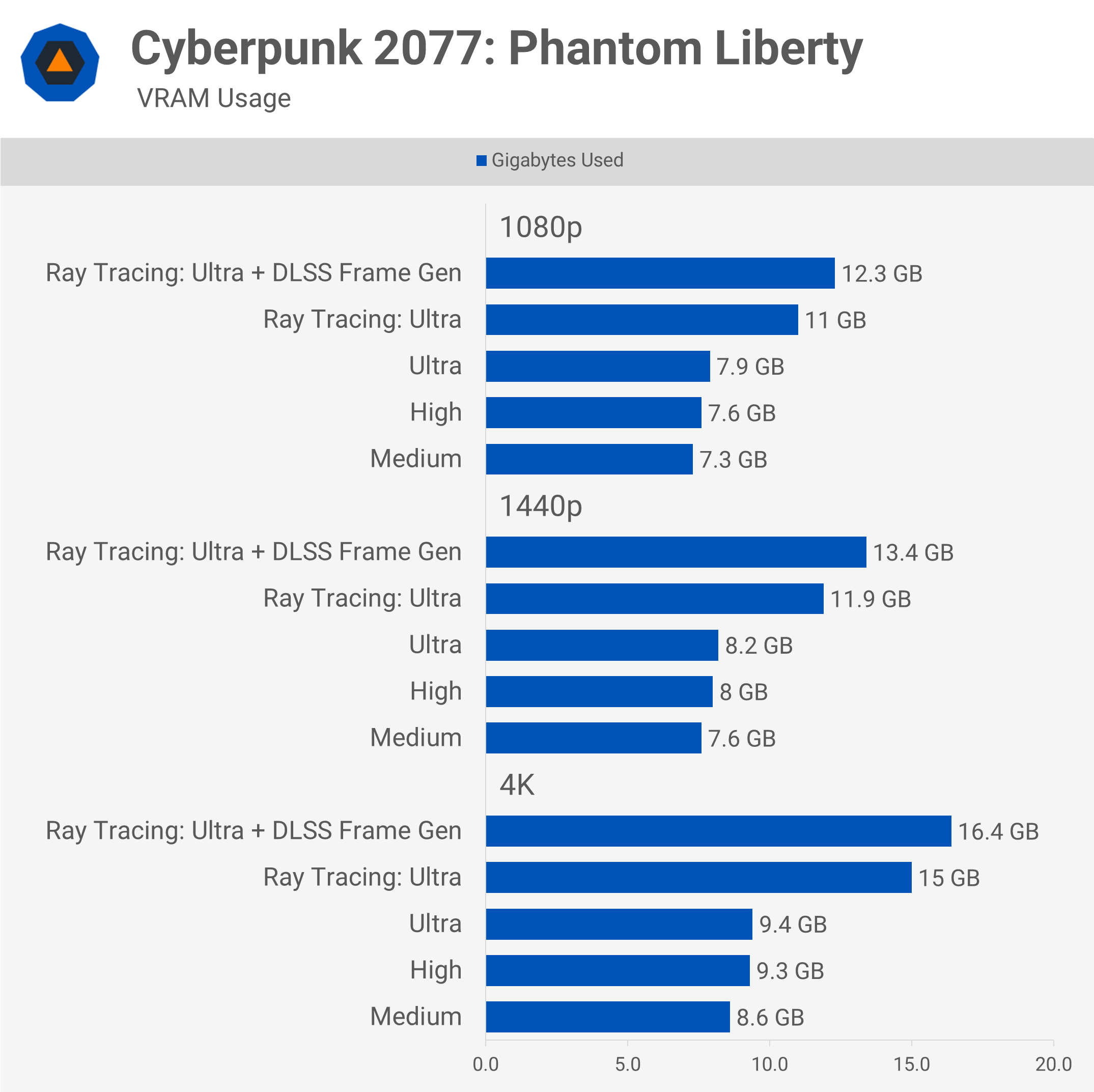

Cyberpunk 2077: Phantom Liberty

Cyberpunk 2077: Phantom Liberty is well-optimized for 8GB graphics cards. Visually, texture quality is probably the biggest weakness of this title, as they tend to be a bit bland. At 1080p, it's possible to run with the 'ultra' preset on an 8GB card without encountering memory-related issues, and the same is true at 1440p. Performance issues will start to appear at 4K, but no 8GB GPUs are powerful enough to play Cyberpunk 2077 at 4K using the 'ultra' preset, making this a non-issue.

Problems start to occur for 8GB graphics cards when enabling ray tracing, which pushes memory usage to 11GB at 1080p and 12GB at 1440p. If you enable frame generation as well, you're looking at 12GB of usage at 1080p, 13.5GB at 1440p, and 16.5GB at 4K.
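
To turn usage figures like these into a buying decision, one simple approach is to pick the smallest common VRAM capacity that covers the measured usage. The Python sketch below illustrates the idea. The capacity tiers are the ones mentioned in this article, while the function name and the optional `headroom_gb` parameter are hypothetical additions of ours.

```python
from typing import Optional

# VRAM capacities mentioned in this article, in GB.
CAPACITIES_GB = (8, 12, 16, 24)


def smallest_fit(usage_gb: float, headroom_gb: float = 0.0) -> Optional[int]:
    """Return the smallest capacity covering usage_gb plus headroom_gb, or None."""
    for capacity in CAPACITIES_GB:
        if usage_gb + headroom_gb <= capacity:
            return capacity
    return None


# Ray tracing + frame generation figures quoted above for Cyberpunk 2077.
rt_framegen_gb = {"1080p": 12.0, "1440p": 13.5, "4K": 16.5}
for resolution, gb in rt_framegen_gb.items():
    print(resolution, "->", smallest_fit(gb), "GB card")
```

Note that usage sitting exactly at a card's capacity, as with 12GB at 1080p here, is a borderline fit. Passing a small `headroom_gb` value pushes such cases up to the next tier.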

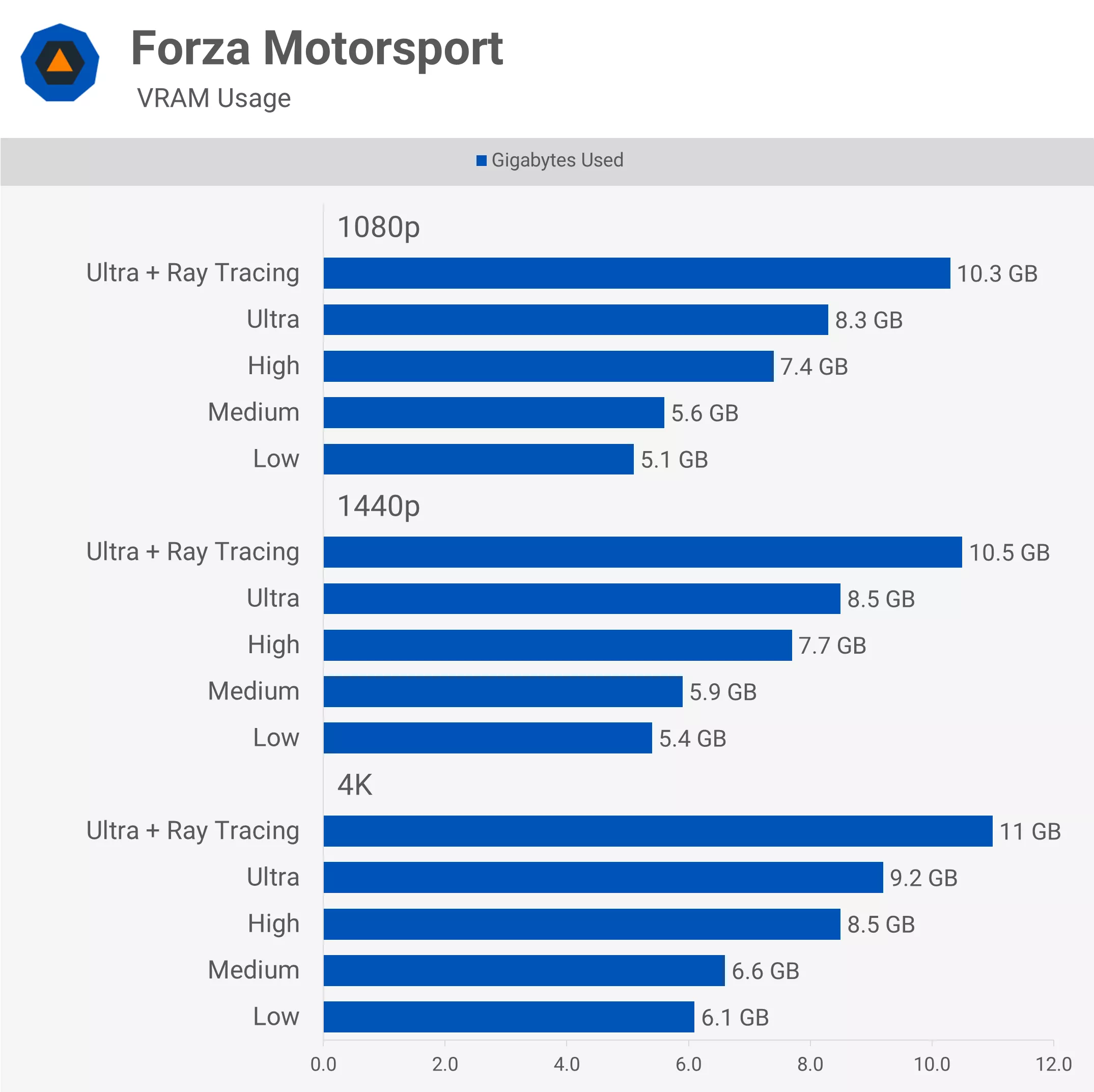

Forza Motorsport

Forza Motorsport will play comfortably on an 8GB graphics card using the 'high' preset, even at 1440p, though 4K might be a stretch. You could potentially get away with the 'ultra' preset at 1080p and 1440p, depending on how the game handles assets. The ultra preset with ray tracing enabled is out of the question, though, as it uses 10.3GB at 1080p and 11GB at 4K, so you'll want a 12GB GPU to get the most out of this title.

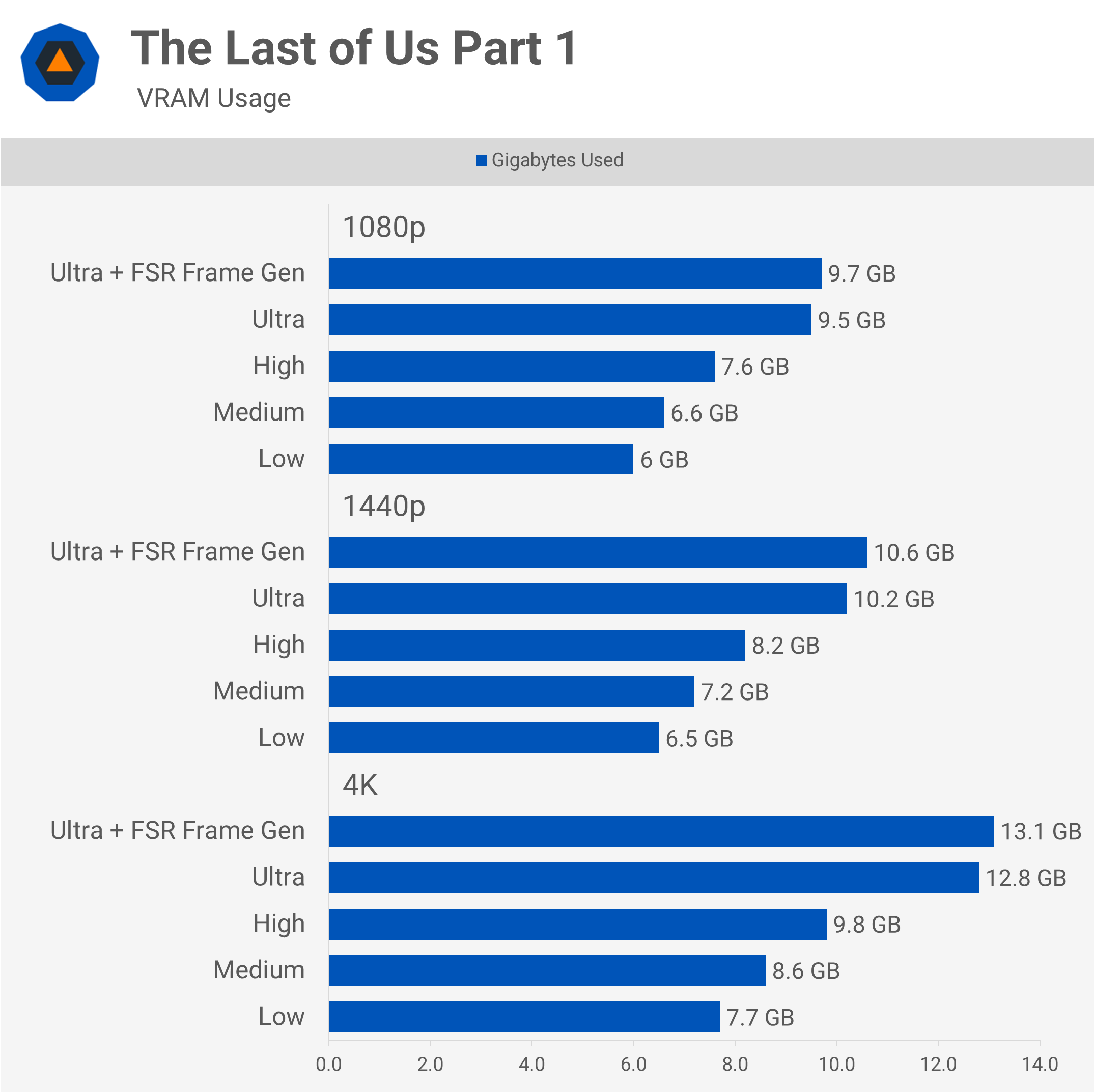

The Last of Us Part 1

The Last of Us Part 1 is thought to have kicked off the VRAM discussion around 8GB cards, but there were signs of trouble well before this title came along. Even after several optimization patches, the game still requires more than 8GB of VRAM at 1080p if you wish to use the 'ultra' preset. The 'high' preset, on the other hand, will work fine and should even be okay at 1440p, though it will be insufficient at 4K.

At 1440p, a 12GB buffer should be plenty, though it might start to become a problem at 4K. This game uses FSR frame generation, which doesn't seem to increase VRAM usage significantly.

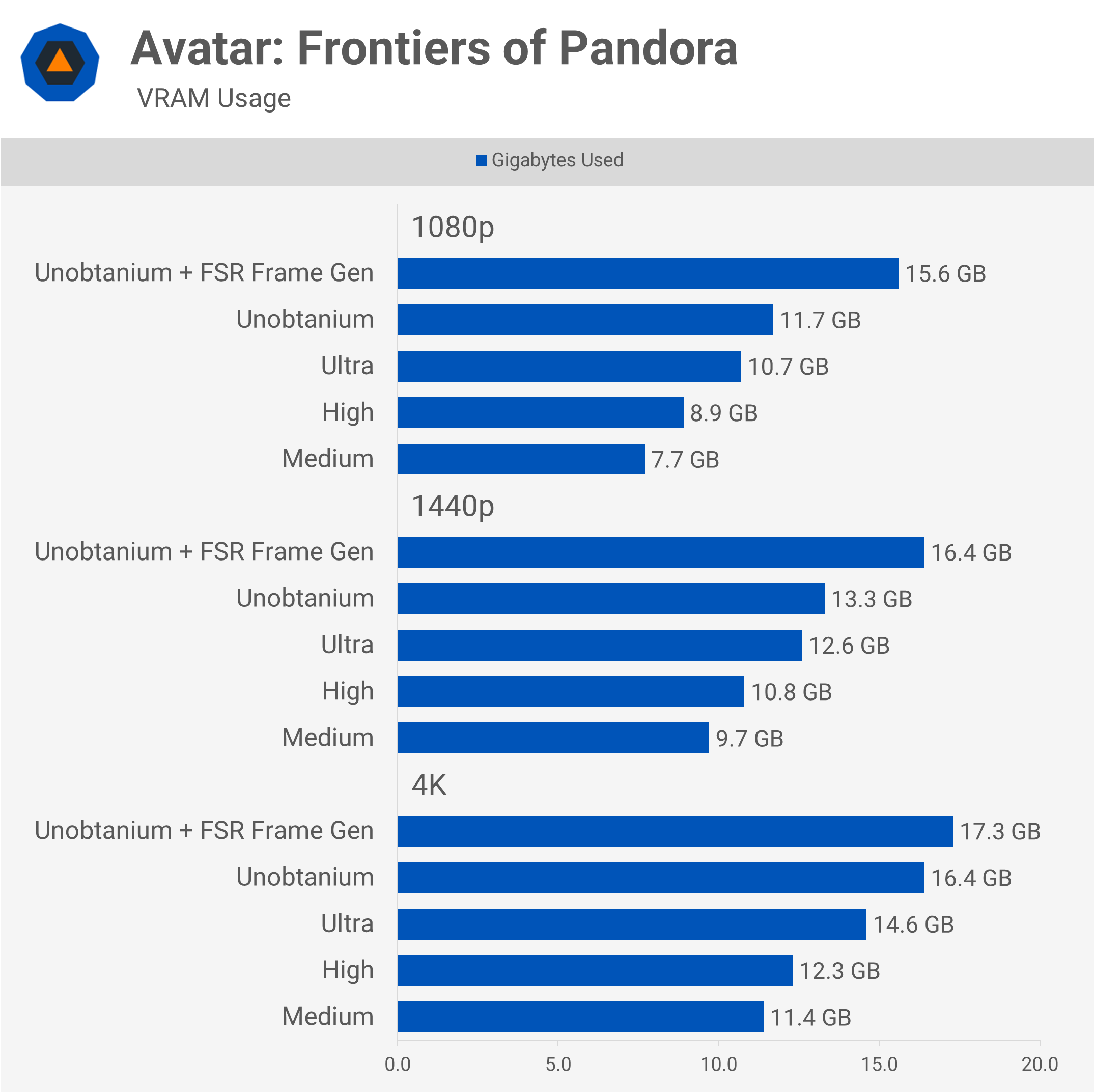

Avatar: Frontiers of Pandora

Moving on to Avatar, this game is very memory-hungry, though unlike some of the other games we've tested, exceeding the VRAM buffer here doesn't result in a huge performance or visual loss. The main issue you'll see is less consistent frame time performance. While that's not ideal, it's not as jarring as in most other titles.

For example, playing using the 'ultra' preset on an 8GB GPU isn't too bad despite the game using 10.7GB at 1080p and 12.6GB at 1440p. It's not flawless, and you will see frame time spikes as the game tries to manage a lack of VRAM. A quick in-game comparison between the 8GB and 16GB versions of the RTX 4060 Ti at 1440p using the ultra preset showed the 16GB model delivering 25% higher 1% lows.
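
For readers unfamiliar with the "1% lows" metric, the Python sketch below shows one common way to derive it from a list of frame times. Definitions vary between capture tools, and the text does not specify which one was used here, so treat this as an illustrative assumption: it takes the slowest 1% of frames and converts their mean frame time to fps.

```python
from statistics import mean
from typing import Sequence


def average_fps(frame_times_ms: Sequence[float]) -> float:
    """Average fps over the run: total frames divided by total time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms: Sequence[float]) -> float:
    """Fps implied by the mean frame time of the slowest 1% of frames."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    return 1000.0 / mean(slowest_first[:count])


# Synthetic example: 99 smooth frames at 10 ms plus a single 50 ms spike.
frame_times = [10.0] * 99 + [50.0]
print(f"average: {average_fps(frame_times):.1f} fps")       # prints "average: 96.2 fps"
print(f"1% low: {one_percent_low_fps(frame_times):.1f} fps")  # prints "1% low: 20.0 fps"
```

The synthetic example shows why the metric matters for VRAM-limited cards: a single long frame barely moves the average but drags the 1% low down sharply.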

For those gaming at 4K, you will want at least 16GB of VRAM to ensure optimal performance.

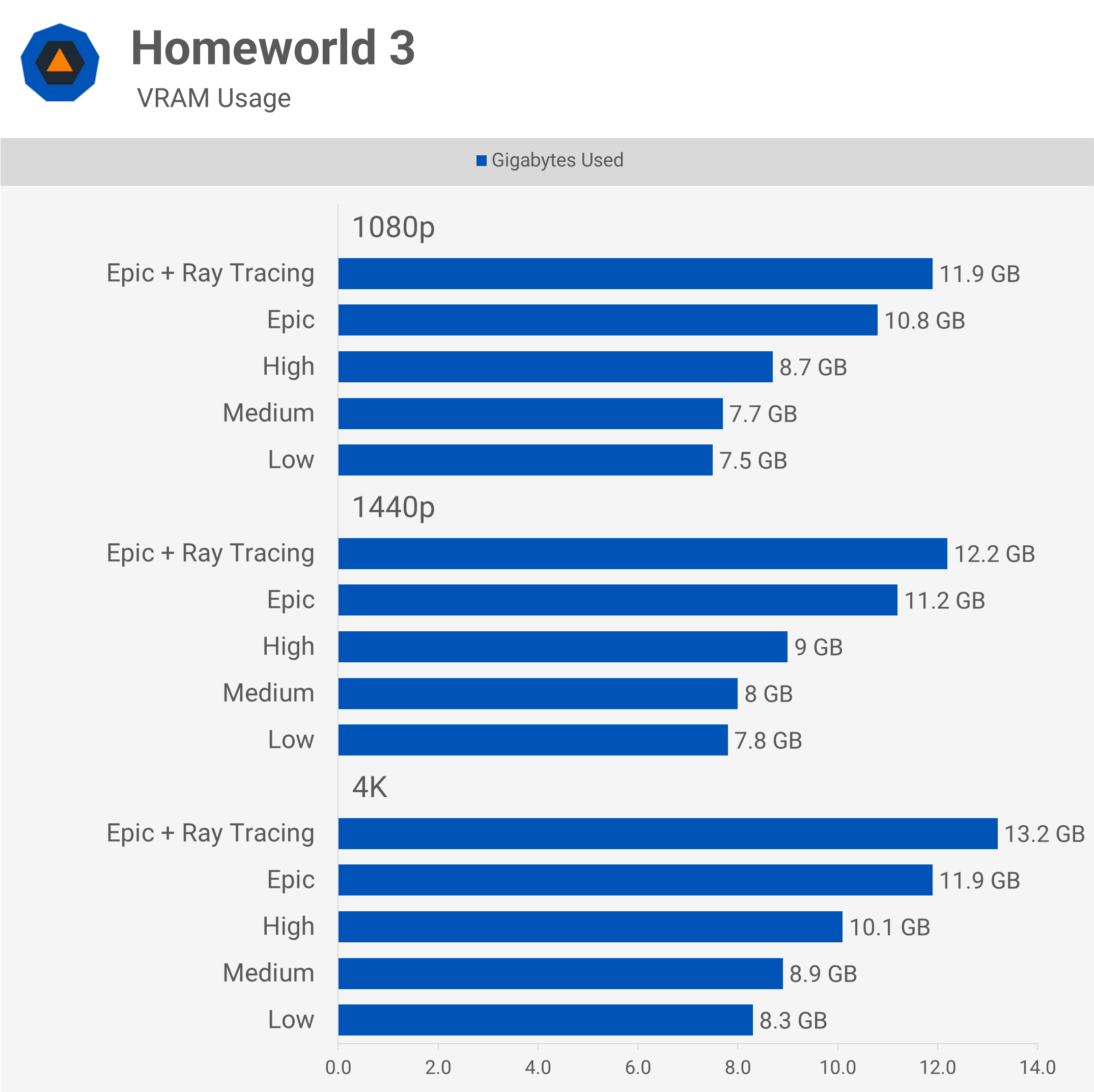

Homeworld 3

Those playing Homeworld 3 with an 8GB graphics card should avoid the 'epic' quality preset and treat 'high' as the maximum setting. Even 'high' results in noticeable frame time issues, though, so we recommend 'medium', as this will keep you within an 8GB buffer, even at 1440p.

For those wanting to visually max out this title without worrying about a lack of VRAM, at 1080p and 1440p, 12GB will work just fine, but for 4K with ray tracing, we recommend 16GB.

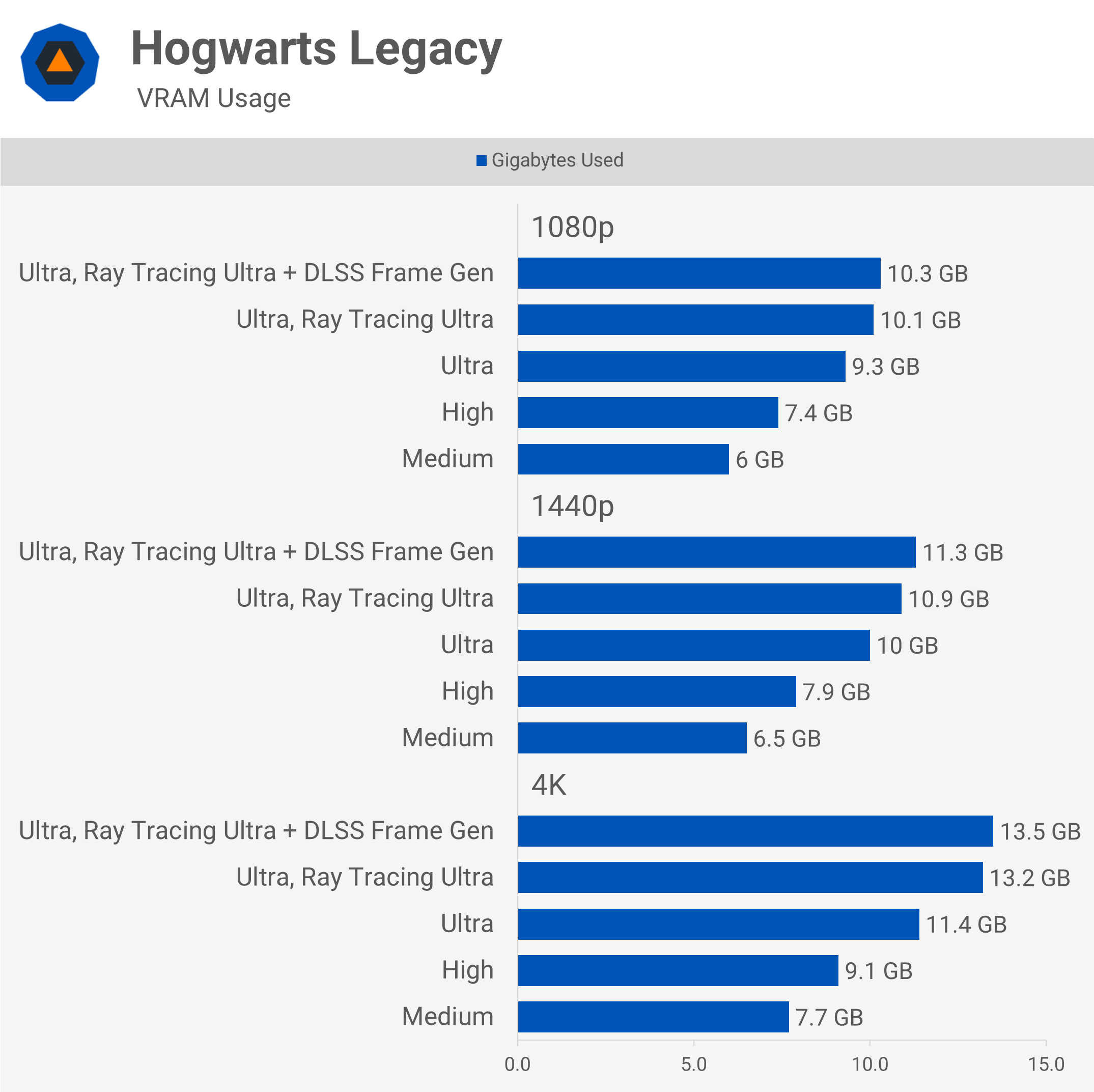

Hogwarts Legacy

We know Hogwarts Legacy is a heavy user of VRAM, and without enough, all kinds of undesirable things happen. Basically, you're looking at fps performance tanking, horrible frame time stuttering, and aggressive texture pop-in, or just no textures at all.

Realistically, 8GB graphics cards are limited to the 'high' preset without any ray tracing at 1080p and 1440p. Enabling the 'ultra' preset will result in either missing textures or poor frame time performance.

12GB graphics cards should be fine at 1080p and 1440p, regardless of the quality settings used. However, this won't be the case at 4K, where we recommend having at least 16GB.

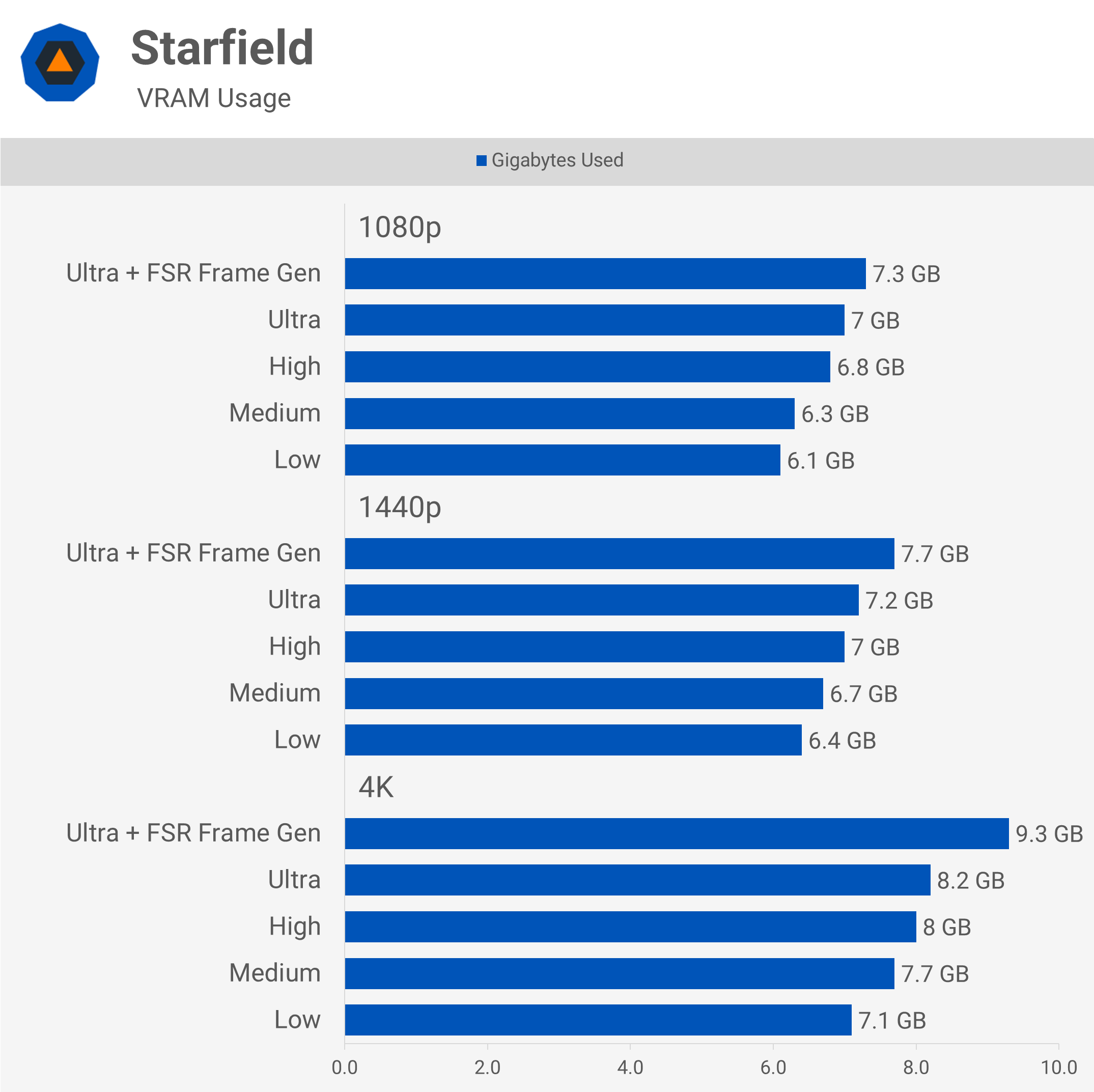

Starfield

Starfield is probably the least visually impressive triple-A title released last year. Visually, it's a bit of a hot mess, but at least it's not memory-hungry, using very little VRAM at 1080p and 1440p. As a result, 8GB of VRAM is plenty here. It's not until you get up to 4K with ultra settings that you might need 12GB.

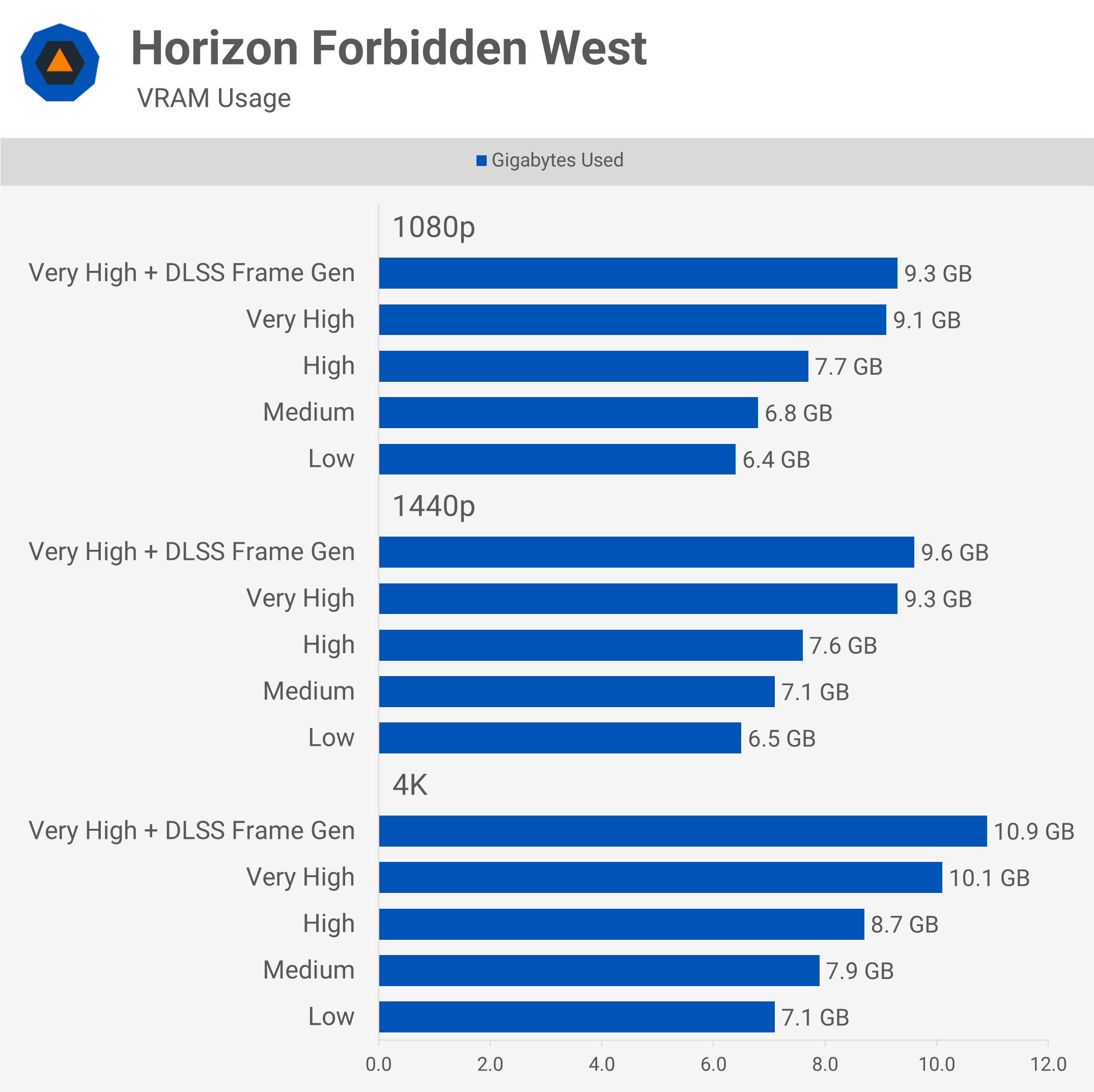

Horizon Forbidden West

As we've already seen, Horizon Forbidden West requires more than 8GB of VRAM if you wish to use the 'very high' preset. In fact, VRAM usage at 1080p and 1440p is almost identical. Dialing down to the 'high' preset will work, so at least there's that. For this title, 12GB is ample, even for those gaming at 4K.

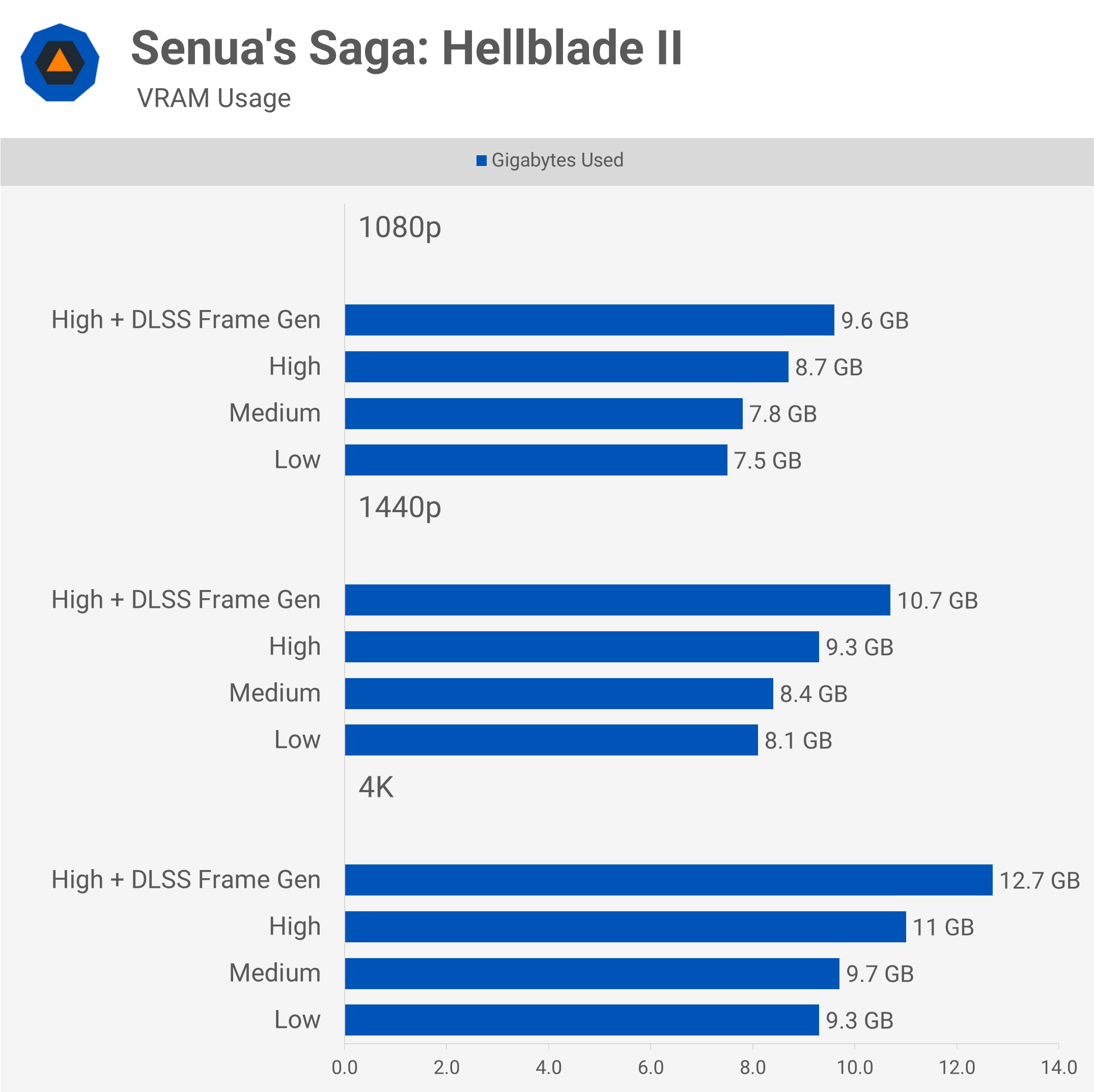

Senua's Saga: Hellblade II

Senua's Saga: Hellblade II will use more than 8GB of VRAM at 1080p with the 'high' preset and well over that if you want to enable frame generation. The situation worsens for 8GB graphics cards at 1440p, where you will want at least 12GB. At 4K, there is evidence that 12GB might not be enough, and if it is, it's just barely enough, as seen when enabling DLSS frame generation with the 'high' preset.

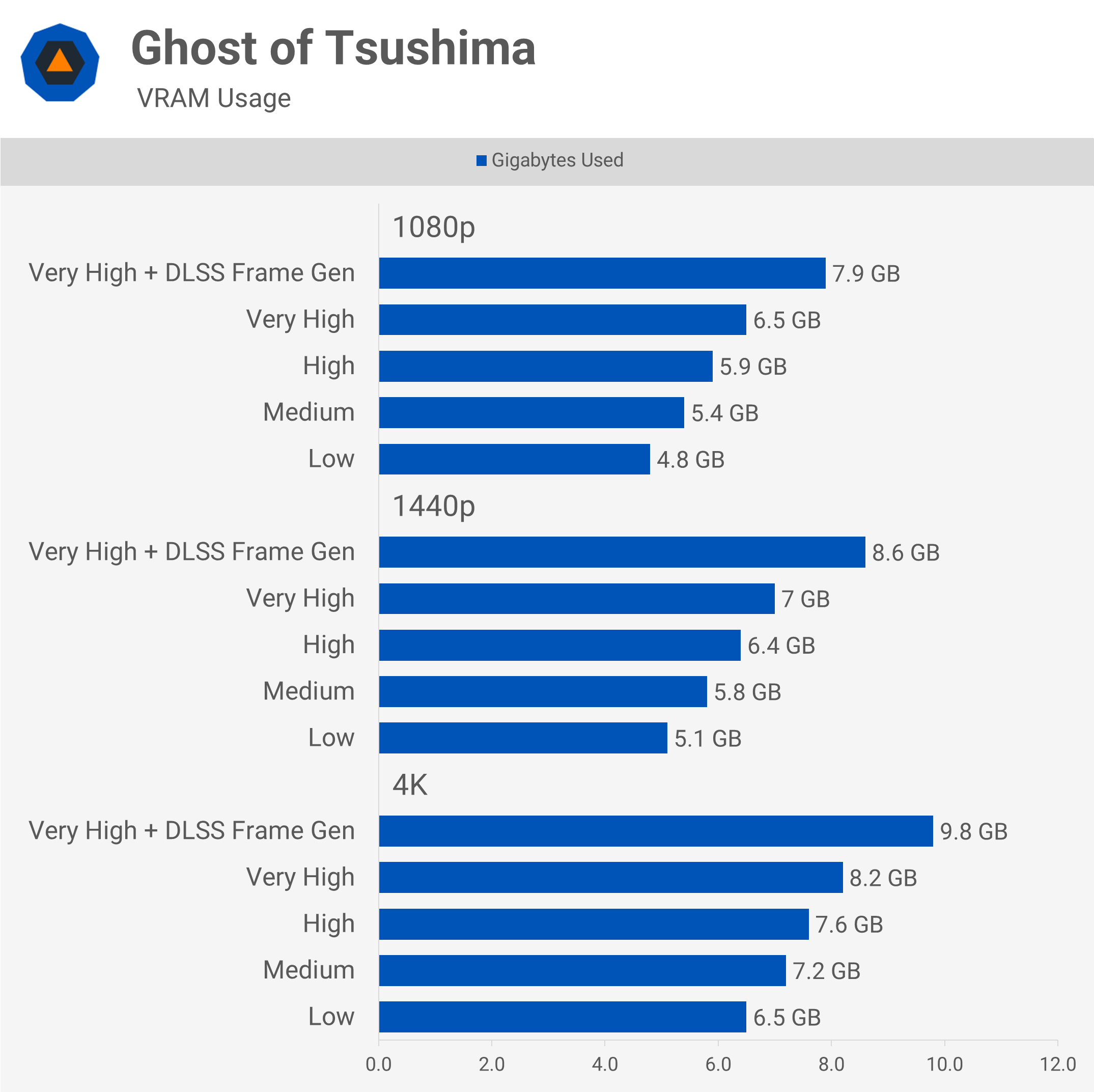

Ghost of Tsushima

Ghost of Tsushima will play just fine on an 8GB graphics card at 1080p, even with the very high preset, and the same is true right up to 4K. That said, if you enable DLSS frame generation, you might see some issues at 1440p and almost certainly at 4K, as the game pushes well past 8GB, though 12GB will be more than enough.

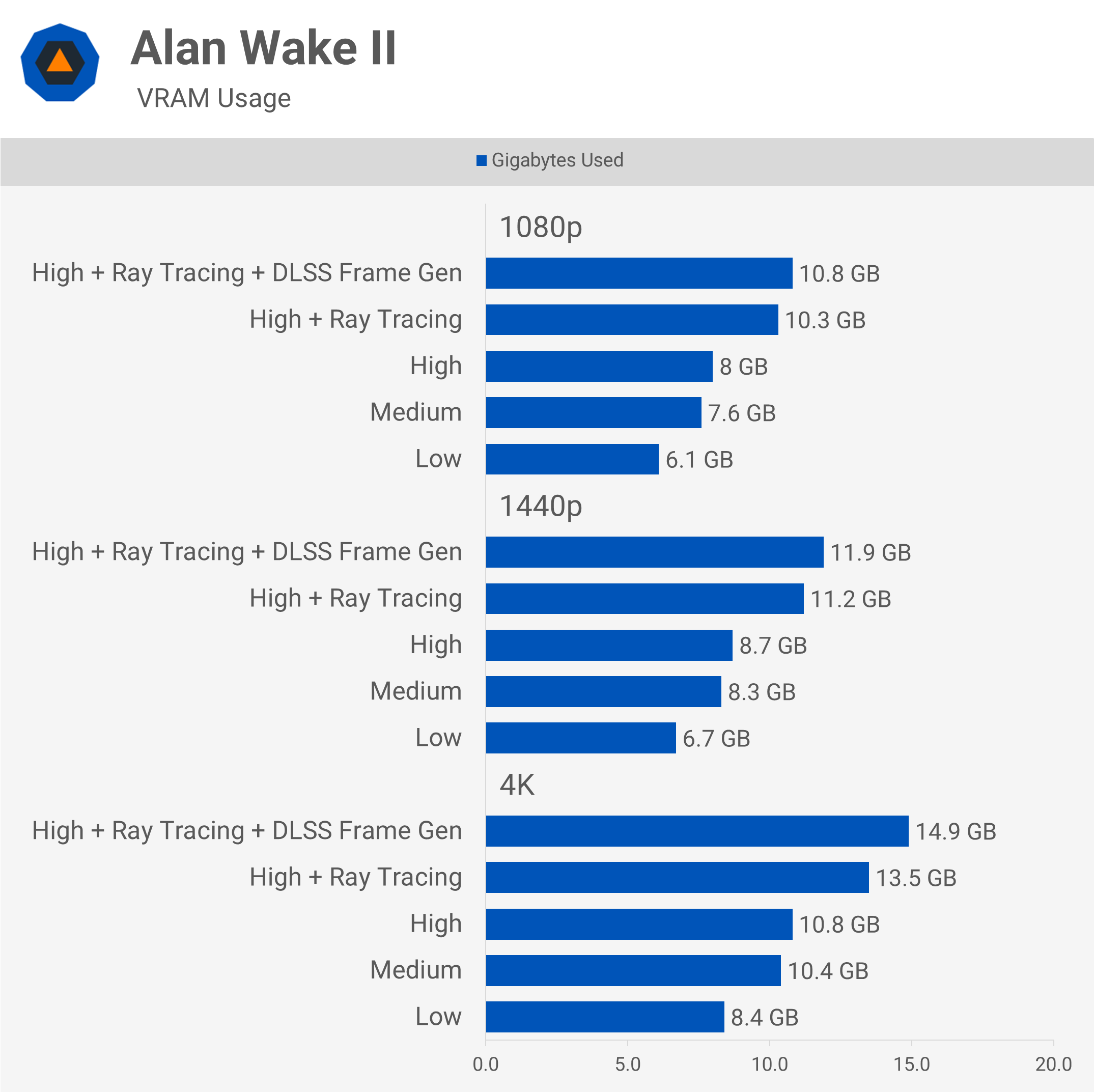

Alan Wake II

Alan Wake II is a visually impressive game that requires a good amount of VRAM. At 1080p, an 8GB GPU will suffice for the medium and high presets. However, enabling ray tracing will be problematic as this requires over 10GB, so a 12GB VRAM buffer is necessary here.

Jumping up to 1440p, it is possible to get away with 8GB using the high preset, though occasional frame stutters may be observed. Again, ray tracing is completely out of the question when using the high preset and will require a 12GB model. For those wanting to play Alan Wake II at 4K, you will need 12GB of VRAM for most settings, and really, 16GB is necessary for ray tracing.

What We Learned

So there you have it: more and more games are using over 8GB of VRAM, particularly at 1440p with high-quality settings. As we've known for a while now, ray tracing does increase VRAM usage substantially, so if you are interested in RT moving forward, we recommend a minimum of 16GB.

For those of you gaming at 1080p, 8GB is often right on the edge for the latest titles, and in some cases, high or ultra settings will push you over. At 1440p, we've found 8GB to be more suitable for medium presets, but if you want to enable ray tracing, it's likely going to be a problem.

The great thing about VRAM usage is that you can dial down things like textures and still receive a perfectly playable experience, so there is some scalability. This means if you're buying more entry-level products, compromising on texture quality is much less of an issue. This is why products like the Radeon RX 6600 have been perfectly acceptable, at least when dropping down to $200 or less. For that kind of money, the compromise on VRAM is somewhat justifiable.

That said, paying $400 for an 8GB product is a bad joke and is what has made the 8GB version of the RTX 4060 Ti so offensive to potential buyers. Released midway through 2023, it's hard to believe Nvidia was still asking $400 for 8GB of VRAM given the current market conditions.

Realistically, gamers should have been demanding back in 2023 that anything over $300 be armed with at least 12GB of VRAM, and that anything over $400 have 16GB. AI boom or not, Nvidia still wants to sell some GeForce GPUs, and the same applies to AMD and its Radeon lineup.

Regardless of what did or didn't happen, with the exception of entry-level options, you shouldn't be buying 8GB graphics cards anymore. 12GB is now the bare minimum, and 16GB is the ideal target.

Rumor has it that the next generation of GeForce GPUs won't see an increase in VRAM capacity, which we find hard to believe given how limited the Ada Lovelace generation was. However, we also wouldn't put it past Nvidia, especially given how many PC enthusiasts on places like Reddit and X seem to defend mid-range 8GB graphics cards.

Anyway, that's our recommendation: buy a graphics card with at least 16GB of VRAM for peace of mind, knowing all the textures will be rendered the way they're meant to be and that VRAM won't be responsible for any frame time issues.