By Jason Snell

July 5, 2024 2:16 PM PT

I’ll have my AI email your AI

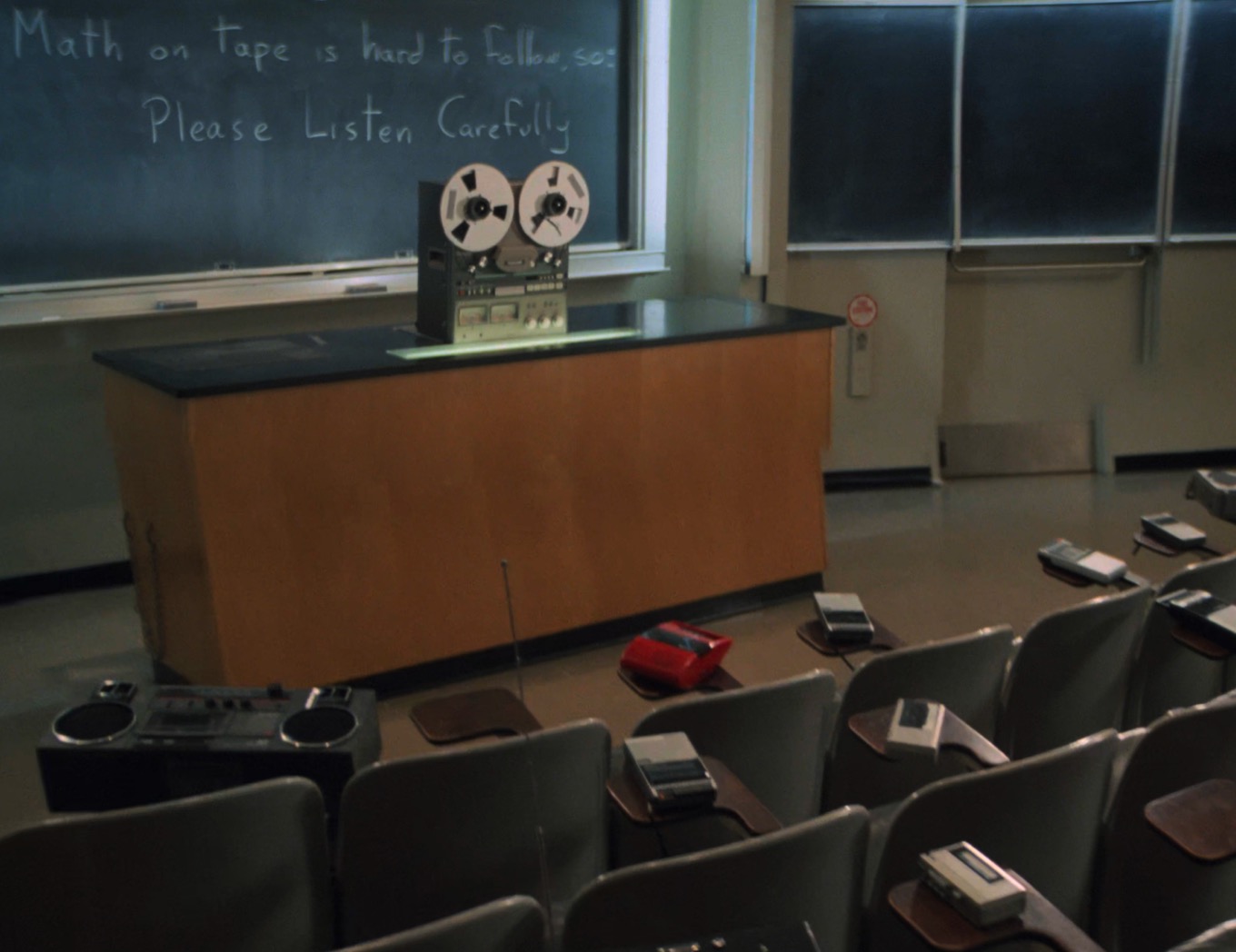

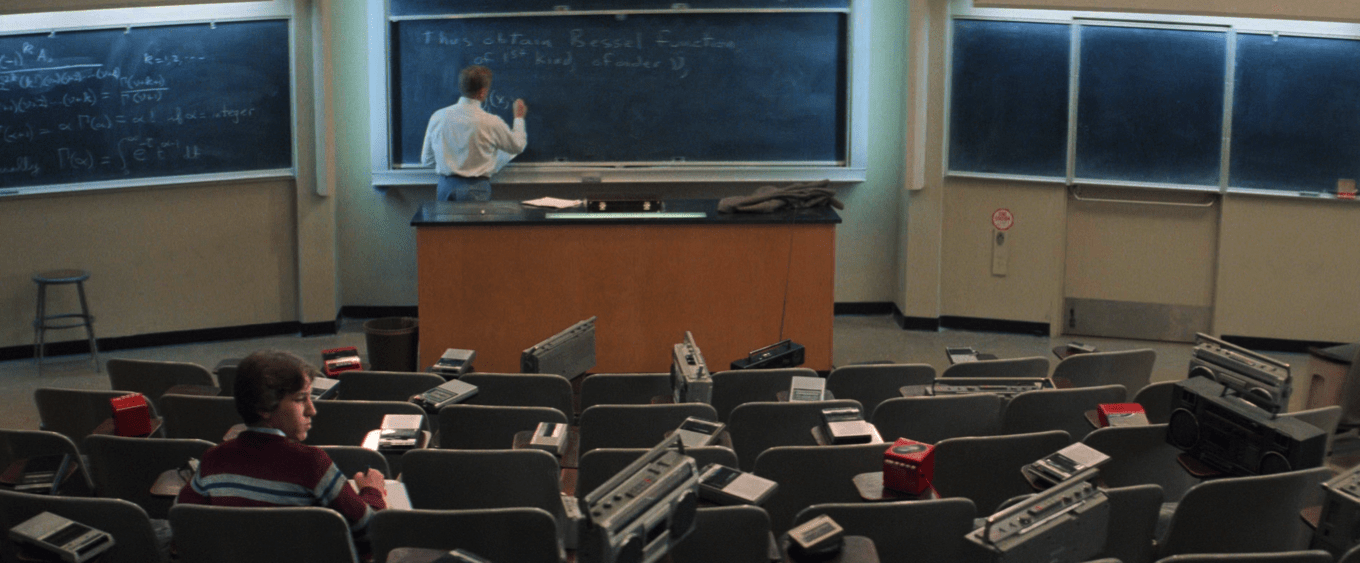

There’s a joke in one of my favorite movies, “Real Genius,” which feels directly applicable to a lot of AI discussions we’re having today. (It’s an ’80s movie, so it’s not a scene—it’s a montage, set to “I’m Falling” by The Comsat Angels.)

In it, our protagonist Mitch attends a normal math lecture, but over the course of the montage most of the class is replaced by tape recorders of various sizes.[1] In the final shot, Mitch enters the lecture hall to discover that a large reel-to-reel tape player has replaced the professor himself. It’s just one tape recording being played into all the other tape recorders.

One of the announced features for Apple Intelligence, Smart Reply, will offer quick ways to respond to direct queries in email, asking you simple questions (“Do you like me? Check yes or no.”) and drafting a reply for you.

Apple is hardly the first company to suggest that in the future, your phone will write your emails for you. Gmail’s Smart Compose has been doing it for several years, and Apple’s been offering its own version of multi-word autocomplete for almost a year.

But with this latest round of AI announcements, once again, I’ve heard a lot of people making jokes about how, pretty soon, your AI will email my AI, and humans will never need to be involved anymore! It’s usually meant as absurdity, but I think there might be more to it than that.

If our AIs end up emailing each other endlessly, striking up meaningless conversations and having their own inner lives, that might make for an interesting science fiction story, but I’m not sure it would really matter to us as humans. Think of it this way: email is just a communication pathway. It was built for humans to talk to each other, but for years now, we’ve received automated emails, newsletters, spam, and the rest.

If you know much about tech, you’ve heard of APIs, or Application Programming Interfaces. APIs are, at their most abstract level, an agreed-upon method for software to use or communicate with other software. APIs are in the cloud, on the web, on our devices, everywhere. So why not in our email messages, too?

I realize that it’s absurd to consider that a free-form email message would ever be better than a programmed API, but email has a flexibility that other APIs don’t. Emails can be about literally anything. And a lot of times, APIs are just not well used because the people who would use them are lazy, busy, uninterested, or don’t know they exist.

Let’s say you need to find a common meeting time for you and four other people. Are there internet calendar APIs for this? Yes! Are there calendar apps that feature built-in support for this sort of scheduling? Yes! Are there literally web apps that will do this work for you? Yes! (I use StrawPoll, myself.) And yet, I’d bet that most people just… send an email to everyone asking them if they can make a certain time and try again until they get it right. It’s not efficient, but it is convenient.

Now imagine that same scenario, but everyone is using an AI system that’s reading email and has access to each user’s calendar. The end result might be the same as using an existing API or web app, but instead email messages among AIs are sorting it out. Maybe some AIs know exactly when their person is available; others might need to ask. But instead of the onus being on the users to interface with other systems and bring it all together, the AIs handle most of it and the user just chimes in when it’s necessary.

I don’t think that’s an absurd scenario. (And yeah, if the AIs are particularly intelligent, maybe they’ll use an existing calendar service to solve the problem up front.) It’s the equivalent of each of those people having their own human assistant setting up the meeting—except none of them likely have the budget to hire a personal assistant.
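For the curious, the core of that scheduling scenario is small once every assistant has reported when its person is busy. Here is a minimal sketch in Python, assuming each AI has already replied with a list of busy intervals; the function name, the people, and the data layout are all made up for illustration and don't correspond to any real calendar API.

```python
from datetime import datetime, timedelta

def first_common_slot(busy_by_person, window_start, window_end, duration):
    """Return the earliest start time when everyone is free for `duration`, or None.

    `busy_by_person` is a hypothetical structure: a dict mapping a name to a
    list of (start, end) datetime pairs, as each assistant might report them.
    """
    # Pool everyone's busy intervals and sort them by start time.
    busy = sorted(iv for intervals in busy_by_person.values() for iv in intervals)
    cursor = window_start  # earliest moment not yet ruled out
    for start, end in busy:
        if start - cursor >= duration:
            break  # the gap before this busy interval is long enough
        cursor = max(cursor, end)  # otherwise skip past the busy interval
    return cursor if cursor + duration <= window_end else None

# Illustrative data only: three people on one (arbitrary) day.
day = datetime(2024, 7, 8)

def at(hour, minute=0):
    return day.replace(hour=hour, minute=minute)

busy = {
    "alice": [(at(9), at(10)), (at(13), at(14))],
    "bob": [(at(9, 30), at(11))],
    "carol": [(at(11), at(12))],
}

print(first_common_slot(busy, at(9), at(17), timedelta(hours=1)))
# prints 2024-07-08 12:00:00
```

The arithmetic is the easy part. What the email-as-API idea would add is the gathering of those busy lists, with each assistant answering for its own person and asking a human only when it has to.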

In fact, where AI assistants really run into trouble is not when they’re talking to other AIs, but when they’re talking to human beings. Remember when Google showed off its service that pretended to be a human and called real people to verify Google Maps data or make reservations? That’s what I really dread: being battered by emails and texts and phone calls from AIs operating for people and organizations who want my attention but aren’t willing to give me any of their own.

As long as I, a human, don’t have to read a pile of AI-to-AI email communications, I don’t mind if they have them. The protocol doesn’t really matter—use iMessage or RCS, for all I care—so long as the job gets done and I’m not left to clean up the mess. Keep me out of it, other than answering questions or making my own requests.

Email and text messages may be a stupid way to build an interconnected web of AI software systems, but history has frequently shown us that sometimes the easiest solution is the one that’s available, not the one that’s the most elegant.[2]

1. The scene is meant to satirize the apparent mid-80s proclivity of college students to tape their lectures, or to skip their lectures and have a friend tape them? I dunno. Three years after “Real Genius” came out, I went to college and discovered that there was an official student organization that would sell you the complete lecture notes of any major class.

2. My university’s Lecture Notes service was eventually replaced by, you guessed it, AI.