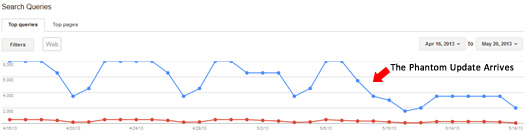

In early May there was a lot of chatter in the webmaster forums about a major Google update. Google wouldn’t confirm that it occurred (big shock), but the level of chatter was significant. Not long after that, Matt Cutts announced that Penguin 2.0 would be rolling out within the next few weeks, and that it would be big. All signs point to a major update that will be larger and nastier than Penguin 1.0. Now, I’ve done a lot of Penguin work since April 24, 2012 when Penguin 1.0 rolled out, and I can’t imagine a larger, nastier Penguin. But that’s exactly what’s coming.

So what was this “phantom update” that occurred on May 8th? Was it a Panda update, some other type of targeted action, or was it actually Penguin 2.0 being tested in the wild? I’m a firm believer that Google rolls out major updates to a subset of websites prior to the full rollout in order to gauge its impact. If Penguin 2.0 is rolling out soon, then what we just saw (the phantom update) could very well be our new, cute black and white friend. I guess we’ll all know soon enough.

*Update: Penguin 2.0 Launched on 5/22*

Penguin 2.0 launched on May 22nd and I have analyzed a number of sites hit by the latest algorithm update. I published a post containing my findings, based on analyzing 13 sites hit by Penguin 2.0. You should check out that post to learn more about our new, icy friend.

The First Emails From Webmasters Arrived on May 9th

The first webmasters to contact me about this phantom update were confused, nervous and seeking help and guidance. They noticed a significant drop in Google organic search traffic starting on the prior day (May 8th), and wanted to find out what was going on. Four websites in particular saw large drops in traffic and were worried that they got hit by another serious Google algorithm update.

So, I began to heavily analyze the sites in question to determine the keywords that dropped, the content that was hit, the possible causes of the drop, etc. I wanted to know more about this phantom update, especially if this could be the beginnings of Penguin 2.0.

Phantom Update Findings

After digging into each of the four sites since 5/9, I have some information that I would like to share about what this phantom update was targeting. Since Penguin 1.0 heavily targeted unnatural links, I wanted to know if this update followed the same pattern, or if there were other webspam factors involved (and being targeted). Now, my analysis covers four sites, and not hundreds (yet), but there were some interesting findings that stood out.

Below, I’ll cover five findings based on analyzing websites hit by Google’s Phantom Update on May 8th. And as Penguin 2.0 officially rolls out, keep an eye on my blog. I’ll be covering Penguin 2.0 findings in future blog posts, just like I did with Penguin 1.0.

Google Phantom Update Findings:

- Link Source vs. Destination

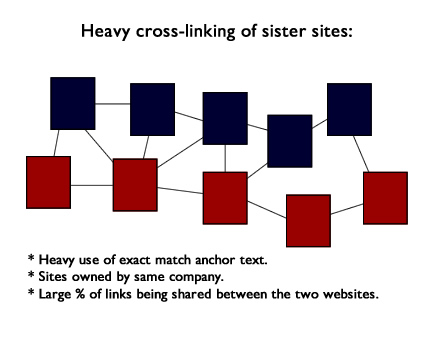

One of the websites I analyzed was upstream unnatural links-wise. It definitely isn’t a spammy website, directory, or anything like that, but the site was linking to many other websites using followed links (when a number of those links should have been nofollowed). Also, the site can be considered an authority in its space, but it was violating Google’s guidelines with its external linking situation.

I’ve analyzed over 170 sites hit by Penguin since April 24, 2012, and this site didn’t fit the typical Penguin profile exactly… There were additional factors, some of which I’ll cover below.

But, being an upstream source of unnatural links was a big factor in why this site got hit (in my opinion). So, if this is a pre-Penguin 2.0 rollout, I’m wondering how many other sites with authority will get hit when the full rollout occurs. I’m sure there are many site owners that believe they can’t get hit, since they think they are in great shape as an authority… I can tell you this site got hit hard.

- Cross-Linking (Network-like)

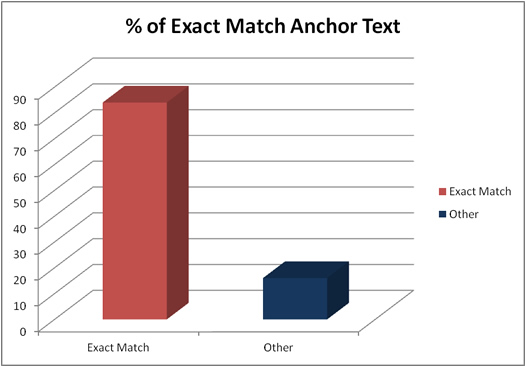

Two of the sites that were hit were cross-linking heavily to each other (as sister websites). And to make matters worse, they were heavily using exact match anchor text links. Checking the link profiles of both sites, the sister sites accounted for a significant amount of links to each other… and again, they were using exact match anchor text for many of those links. It’s worth noting that I’ve helped other companies (before this update) with a similar situation. If you own a bunch of domains, and you are cross linking the heck out of them using exact match anchor text, you should absolutely revisit your strategy. This phantom update confirms my point.

- Risky Link Profiles (historically as well as current)

This was more traditional Penguin 1.0, but each of the four sites had risky links. Now, one site in particular had a relatively strong link profile, has been around for a long time, and had built up a lot of links over time. But, there were pockets of serious link problems. Spammy directories, comment spam, and spun articles were driving many unnatural links to the site. But again, this wasn’t overwhelming percentage-wise. I’ve analyzed some sites hit by Penguin 1.0 that had 80-90% spammy links.

This wasn’t the case with the site mentioned above. Two of the sites I analyzed had more spammy links. Their situation looked more Penguin 1.0-like. And they got hit hard. There were many spammy directories linking to the sites using exact match anchor text, comment spam was a big problem, etc. And drilling into their historic link profile, there were many more that had been deleted already. So, their link profiles had “unnatural link baggage”. And I already mentioned the cross-linking situation earlier (with two sites). So yes, links seemed to have a lot to do with this phantom update (at least based on what I’ve seen).

- Scraping Content

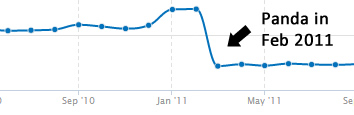

To make matters more complex, two of the sites were also scraping some content to help flesh out pages on the site. This wasn’t a huge percentage of content across each of the two sites mentioned, but definitely was big enough of a problem that it stood out during my analysis. The other two sites didn’t seem to have this problem at all. Scraping-wise, one site was providing excerpts from destination webpages, and then linking to those pages if users wanted more information (this was happening across many pages). The other site had included larger portions of text from the destination page without linking to it (more traditional scraping).

- Already Hit by Panda

OK, this was interesting… All four sites I analyzed had been hit by at least one Panda update in the past. Two were hit by the first rollout (Feb 2011), one was hit in July of 2012, and the other in September 2012. Clearly, Google had a problem at one point with the content on these sites. So, how does that factor into the phantom update or Penguin 2.0? I’m not sure, but it was very interesting to see all four had Panda baggage.

So, does your Google baggage hurt you down the line, and does the combination of unnatural links and content spam exponentially hurt your site with this update? I’ll need to analyze more sites before I can say with confidence, but it’s worth noting.
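If you want a rough, do-it-yourself read on the link-related findings above (cross-linking between sister sites and heavy exact match anchor text), a small script can summarize a backlink export. The sketch below is only an illustration: the backlink list, the `OWNED_DOMAINS` set, and the `TARGET_PHRASES` set are all made-up assumptions, and there is no known threshold at which Google takes action. It simply shows what share of links comes from domains you own and what share uses exact match anchors.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical backlink export: (source URL, anchor text) pairs.
# In practice this would come from whatever link tool you use.
backlinks = [
    ("http://sister-site.example/page1", "blue widgets"),
    ("http://sister-site.example/page2", "blue widgets"),
    ("http://sister-site.example/page3", "Example Co"),
    ("http://news.example/story", "this article"),
    ("http://directory.example/listing", "blue widgets"),
]

# Assumptions for illustration only: domains you own, and the
# money keywords you consider "exact match" for the target site.
OWNED_DOMAINS = {"sister-site.example"}
TARGET_PHRASES = {"blue widgets"}


def link_profile_summary(links, owned_domains, target_phrases):
    """Summarize owned-domain share, exact match share, and top anchors."""
    total = len(links)
    if total == 0:
        return {"owned_share": 0.0, "exact_match_share": 0.0, "top_anchors": []}

    owned = 0
    exact = 0
    anchors = Counter()
    for source_url, anchor in links:
        host = urlparse(source_url).hostname or ""
        normalized = anchor.strip().lower()
        anchors[normalized] += 1
        if host in owned_domains:
            owned += 1
        if normalized in target_phrases:
            exact += 1

    return {
        "owned_share": owned / total,
        "exact_match_share": exact / total,
        "top_anchors": anchors.most_common(3),
    }


summary = link_profile_summary(backlinks, OWNED_DOMAINS, TARGET_PHRASES)
print(summary)
```

A high share on either number doesn’t prove anything by itself, but it’s a quick way to spot the kind of lopsided profile described above before digging into the links manually.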

Reminder: Algorithmic Updates = Tough Stuff

By now, you might notice something important… how hard analyzing updates like this can be for SEOs (and trying to pinpoint the root cause). Was it unnatural links that got these sites hit by the phantom update, or was it the content piece? Or, was it the combination of both? If this is Penguin 2.0, does it score a site based on a number of webspam tactics? Based on what I’ve heard and read about Penguin 2.0, this very well could be the case. Again, more data will hopefully lead to a clearer view of what was targeted.

The Big Question – Was this Pre-Penguin 2.0 or something else?

Based on my analysis of sites hit by the phantom update, I’m wondering if we are looking at a two-headed monster. Maybe some combination of Panda and Penguin that Google is calling Penguin 2.0? If that’s the case, it could be disastrous for many webmasters. And maybe that’s why Matt Cutts is saying this is going to be larger than 1.0 (and why he even shot a video about what’s coming).

Based on what I found, links seemed to definitely be an issue, but there were content issues and earlier Panda hits too. There were several factors at play between the four sites I analyzed, and it’s hard to tell what exactly triggered each hit. The only good news for webmasters out there is that none of the four seemed like collateral damage. Each had its own issues to deal with webspam-wise.

Stay Tuned – There’s an Icy Update Approaching

That’s all I have for now. If you have any questions, feel free to include them in the comments below. And definitely keep an eye on my blog as Penguin 2.0 rolls out. As I mentioned above, I’ll be heavily analyzing websites hit by the new and nastier Penguin. Good luck.

GG

it hit in Canada around a week or so later than May 9 :-)

Good to know Andy. If this was Penguin 2.0 being tested, I feel as if there’s going to be a lot of damage when it fully rolls out. The only good news is that none of the four sites I analyzed were collateral damage. They all did have webspam issues…

Do you think that the sites that were hit around May 9 will be further damaged once penguin rolls?

Great question. If this was Penguin 2.0, then I doubt the sites would get hit worse. They already took a beating. You never know, though. :)

Thanks Glenn. I worry about keyword stuffing in regard to this update and future updates. I have an ecommerce site with about 1000 products. About 80% of my products are all the same thing, for example widgets. Is it considered keyword stuffing to use “widget” in every product title if the entire category refers to widgets? Whenever I utilize keyword density tools, it shows my page is way over-optimized due to the term “widget.” I’ve heard that I should remove “widget” from every title and perhaps just name one “blue” or one “red” instead of “blue widget” or “red widget.” Hope that makes sense and would love to hear your thoughts.

You should email me some URLs. Hard to tell without taking a look. Thanks.

I will now… Thanks! :)

I have looked at about 15 sites. About 6 of these sites have been previously hit by Panda. Every one of those took a significant shift (1 up, 5 down) but not at the levels of a Panda hit. Interestingly, the 1 that went up also had a Penguin hit (none of the others did).

Of the about 9 sites that had never been hit by Panda – no impact. So to me, it seems like a Panda algo adjustment or data recalc.

Have you seen any correlation to previous Panda hits?

Thanks for your input Mark. Each of the four sites I analyzed was hit by Panda at a previous time, so it’s possible that content played a role in the phantom update. But the link situation was a serious issue, so I still believe that had a role.

Based on Penguin 2.0 officially rolling out last night, I feel that the phantom update very well could have been P2.0 tested in the wild. I’m analyzing sites hit by Penguin 2.0 now and will write follow-up posts with my findings. Make sure you subscribe to my feed so you can know when those posts are published (definitely soon).

Glenn, looking forward to hearing your follow up findings.

I’m working on a business that operates a single website across 11 countries using unique Top Level Domains for each country. 5 of them were hit on May 8th and saw around a 33% drop in SEO traffic from 140,000 visits to 95,000 SEO visits per day on our primary .com website.

The sites have been hit previously by Panda twice but were able to recover both times. The reality is there are many things that need to be addressed from an SEO standpoint, I wish there was a little more insight into what specifically happened on May 8th so we can prioritize optimizations accordingly.

Thanks for your comment Alex, and I’m sorry to hear about your websites. The May 8th update was very interesting for sure.

The 8th is hard to pin down. Seems like both content and links were part of that update… but hard to tell exactly. Also, I’ll be writing a post soon covering my Penguin 2.0 findings. You should definitely check that out once it’s published.

If you want to send me an email, I’d be interested in quickly checking out your domains. You can email me via my contact page on this site.

Did Matt Cutts say it wasn’t Penguin 2.0 though? Maybe they’re hiding something.

Penguin 2.0 is officially rolling out within the next few weeks. That’s confirmed. The phantom update was not confirmed and that’s what I’ve been analyzing. It wouldn’t shock me at all to find out this was Penguin 2.0 being tested.

Hmm..so does this mean a Gawker Media type set-up will get hit? It’s pretty ridiculous if you can’t link to your own properties or have to no-follow to make Google happy – that’s a tad too much control over what a webmaster is doing with their site if you ask me. I know it’s an attempt to go after PBN but it hit folk with legitimate media set-ups.

You can link to your own sites, but do it in moderation (and not via thousands of exact match anchor text links). There’s a difference. :) If you tip the scale in the wrong direction, you can definitely get hit.

Okay. I get that…the anchor text and thousands of links.

Glenn – Reading this article a bit late, but wondering how this relates to subdomains? I have extensive exact match links between subdomains, because they’re all actually working off of the same database, and the subdomains are really just categories. We’ve been hit hard by this update and some previous Panda updates. In Webmaster tools, links from the subdomains to the main domain (www) show as internal links. However on the subdomains, links from other subdomains show as external links. To me this seems like a bug in Google’s implementation of how they say they treat subdomains, and I’m worried this is a big cause of our issues.

I would have to see the exact setup Philip, but I would be very careful with heavily using exact match anchor text links between subdomains. I’ve seen heavy cross-linking of company-owned domains (and subdomains) cause issues in the past with Panda.

I’ve helped some clients refine their internal linking strategy, which helped contribute to their recovery. But that wasn’t the only problem they had… there were several key changes we made to their site, content, etc. in order for them to recover. I hope that helps.

One more thing: Why should some of the links on the one site have been no-follow? If they weren’t paid for links then why the need to no-follow them?

I can’t elaborate too much, but there was a business relationship between the site and the companies it was linking to. They weren’t natural links… so they should be nofollowed. I hope that helps.

Gotcha. That makes more sense.

Glenn, is one partner link on a separate class C IP on an otherwise perfect site enough to stay hit by Panda? My UK movie blog (Future Movies) has not recovered since 2011 when I was pressured into publishing the odd press release by PR companies. Now all these are deleted but still no recovery, with traffic down 90%+. No obvious scraper, just the odd pinched articles that are filtered out under supplemental for an exact sentence query.

No, I wouldn’t think one partner link is going to get you hit by Panda. There are typically a number of problems present on a site that gets hit by Panda, and I would have to analyze the site to get a better feel for what’s going on. If you want to send along a link to your site via email, I might be able to take a quick look.

Finally someone dug into this and gave it some solid thought and analysis. Thank you.

Of the factors you mention, which seemed to be most definitely correlated with traffic loss for any of the four sites? Said another way, which of these four sites can you most confidently advise on what the problem was/is?

Thanks Andrew. I’m glad you found my post helpful. Until I analyze more sites, it’s hard to see which factors were the driving force behind the drop in rankings. That said, the excessive cross-linking of owned sites using exact match anchor text clearly was a problem. In addition, the followed links to many websites were also a clear problem (when there was a business relationship between the sites). They definitely should have been nofollowed.

Next in line would be the scraping issues. Based on my analysis, you could easily see why Google would have a problem with how the sites were structured content-wise. I hope that helps!

That definitely helps and again, thank you. One more for ya if you have the time and this one is a little more specific:

If you have a blog on a subdomain and you are pointing a majority of followed exact match links back to your primary domain, do you think this qualifies under the new Penguin as a poor cross-linking strategy?

To clarify, we don’t know if that was Penguin 2.0. It could be, or it could be some other targeted action.

Regarding your question, I would have to analyze the situation before explaining the potential impact. It’s normal to link between subdomains, but the question really becomes how overboard is the linking and how much exact match anchor text is used.

client I audited in January hadn’t implemented most of my action plan yet and got slammed by this one – peak daily traffic down 25% and weekend low traffic down by 35% – it’s a 10 million page site. I’ll be digging in over the next couple weeks to understand more, however they had previously been slammed by Panda and Penguin so who the heck knows specifics…

Wow, that’s classic. Put off changes and then they get hammered. I bet they’ll never do that again. :)

Interesting to hear they were hit by Panda and Penguin previously. As you saw, one of my findings was that all four sites I analyzed were hit previously.

I always thought that Penguin 2.0 would be a much more aggressive update, and that it would be broader (catching more webspam). If what we saw was Penguin 2.0, that very well could be the case. I saw both link and content issues during my analysis. If it is targeting broader webspam issues, we’re in for a heck of an update.

Yeah the site is a mess from every angle so I’m not surprised it got hit whatever the update was. And I expect further hits in the next couple months until they clean out the insanity.

Our main site was hit by this update, but in an unusual way. We have a product category page and a product FAQ page. For example, when you would search for dog bowls in Google, the “dog bowls” category page would rank and the FAQ page would be further back in the results. This algorithm change on May 8th took many of our category pages out of the top 50 results, and our FAQ pages would now rank higher than the category pages previously ranked. (Before: Category page would rank 12, FAQ page would rank 40. After: Category page would not be in top 50, FAQ page would rank 8.) For us the FAQ pages do not really make sales and are not really the pages people would want to find if they search for dog bowls in Google.

I have been holding off making any changes until I read some peoples thoughts about this update. We do have a site wide link to 2 websites that we also own. These were followed links, so I have now changed them to “no follow”. Also the category pages that disappeared from the top 50 did have follow links to the products official company page. We have now made those “no follow” as well.

Also I should note that we have only been positively affected by previous Google updates.

Thanks for explaining your situation Jon, and that’s very interesting what happened after the update on the 8th. If you want to email me your domain, I can definitely take a look. Also, were you affected by the official Penguin 2.0 rollout (which went into effect last night at ~7PM ET)?

From looking at some test sites I’m actually noticing a change in the way the algorithm is handling “relevancy” coming from topically related sites that have links, sans keywords. This could be related to a downgrade of “profile type” links that would be easy to create on a massive scale with relevant surrounding content.

This is all preliminary but nonetheless very interesting thus far. Oh, and some black hatters are still kicking ass in the SERPS, unaffected…

Thanks for your comment Chris. Regarding black hat sites, we’ll know more within the next few days how hard some got hit. But you’re right, I see some sites still ranking that probably shouldn’t. Look for future posts about Penguin 2.0 here on my blog. I’m already analyzing some that were officially hit by 2.0.

Hi Glenn,

Really glad to see this post because many weren’t aware of the May 8 traffic drop. I believe your theory that it was pre-testing of Penguin 2.0 is true. I know an ecommerce site that was severely hit, losing about 58% of their Google traffic starting May 8.

They have two blogs, one on a sub-domain and one on another domain that probably both link to the store. That is a similar scenario to what you’ve seen. They were already working on link clean-up, but were not done. They do have a top SEO working on analysis (on retainer prior to this hit at my recommendation).

Ecommerce stores have it tough figuring out what to do about Google and their unreasonable algorithms. As another commenter said, if you have an entire page of one product, what are you supposed to do? Not name a product what it is for SEO reasons and hope the buyers figure it out?

When you have a blog about your products it makes sense to link to the product page in the text and from images in the posts. But if you only sell hundreds of different types of the same product, the keyword phrases are going to be similar much of the time.

Hopefully Bill will know what to do, because I sure don’t know what to tell them. Logic and what works best for the customers may not work when it comes to Google – and never mind that their CEO has said their intention is to favor big brands so no matter what you do, you may be toast eventually.

On a couple of my sites I have cross-linking going on in the footers of the page, using just the business name as anchor text. Do you think this kind of linking would be something Google is cracking down on?

It really depends on how bad the situation is with your cross-linking. I would be careful with how you implement that approach. Using branded anchor text would be best, but you might want to nofollow them. There is a good chance that those sitewide footer links are devalued anyway. :)

My blog was also heavily hit by the May 9th and 22nd updates. I never use any black hat SEO methods. So sad. On May 9th I lost nearly 21% of my traffic, and on the 22nd I lost nearly 22%. I saw that a few scraper WordPress and Blogger blogs added my domain to their sidebars. As a result, I have more than 100 backlinks from those sites. I already submitted a request to disavow them, and I hope big G will consider it. :(

I’m sorry to hear that Chris. Now that I’ve analyzed both Phantom and Penguin 2.0 heavily, Phantom seems much more content-focused. Penguin 2.0 is still heavily link-focused (like Penguin 1.0 was).

Have you checked out my latest post covering Penguin 2.0 findings? http://www.hmtweb.com/marketing-blog/penguin-2-initial-findings/

Thank you so much for your reply, Glenn. It’s very valuable. :D

Did you find any more information to lead you towards what this update was directly about? It’s definitely affected some of our sites, but not in a huge way. It’s hard to put a steady plan together when you are not certain what the issue is. The standout issue is that it’s link-related (legacy stuff), but it could be content (a bit of duplicate).

One of the sites is an authority, dictionary word site which is referenced all over the web using that one word as the anchor; however, it’s all natural. E.g. swimming.com. Looks like it’s been caught up as collateral damage.

Yes, I’ve been analyzing several sites hit by Phantom, and I’ve had many companies reach out to me that have gotten hit. It was definitely bigger than some thought.

Phantom was extremely content-based (and not Penguin-focused, unnatural link-focused, etc.) Most of the sites that got hit were originally hit by Panda (which makes sense given what I’m finding). I’m uncovering a number of different factors that led to the Phantom hit, but again, they are all content-based.

It would be hard to say what your specific issue is without analyzing the site in question. But if you were hit by Phantom, I would analyze the site through a content lens. Similar to what you would do if hit by Panda. I hope that helps.

Thanks Glenn, good to hear some information from others.

The sites have previously had content issues and were likely affected by Panda in the past. The authority site had scraped content in the blog; however, that had been removed before the 9th.

Possibly the 9th was the start of a 10-day Panda rollout.

Hi Glenn,

We had a client site that got hit by Phantom, and potentially Penguin 2.0. We’ve made a number of changes that I believe will help with traffic recovery.

But I wanted to add to the conversation by pointing out something strange that seemingly contradicts what you are saying with prior negative effects from Panda and Penguin updates – using AlgoSleuth we were able to determine that traffic INCREASED significantly following the rollout of Panda 1.0, 2.0, 3.2, and 3.4. Moreover, we’ve only ever seen one slightly negative effect from a Panda or Penguin update (Panda #23. Which coincides with the December holiday season – a very reasonable explanation for the slight decrease.)

At second glance, I guess that this still further solidifies the thought that Phantom is related to Panda, but I find it strange that our client was seemingly helped by Panda, and then hurt by Phantom. Any thoughts on this?

Thanks!

Very interesting. I would have to analyze the site to gain a better understanding of the content, what’s changed, etc. Did the site in question change its content strategy over time?

Also, you should check out my SEW column about the misleading surge in traffic prior to an algorithm hit. That could be the case too (if traffic was increasing prior to Phantom). http://searchenginewatch.com/article/2243421/The-Misleading-Sinister-Surge-in-Traffic-Before-Google-Panda-Penguin-Struck

Yes, my blog went from 10k daily visits to 2k.

Hey, Glenn.

I’m really glad I just found your blog post by coincidence! I know it’s old already.

If you check a screenshot of my traffic over the last 9 months, I think my site was hit by Panda and then by Phantom. http://i.imgur.com/QIxV7og.png

I have read MANY articles regarding this now, and I’m looking into how to solve this.

After doing most of the things you suggest, what should I do then? Just wait and hope it helps, or should I contact Google through GWT?

Thanks again Glenn!

Regards