On Tuesday, September 23, Google began rolling out a new Panda update. Pierre Far from Google announced the update on Google+ (on Thursday) and explained that some new signals have been added to Panda (based on user and webmaster feedback). The latter point is worth its own blog post, but that’s not the focus of my post today. Pierre explained that the new Panda update will result in a “greater diversity of high-quality small- and medium-sized sites ranking higher”. He also explained that the new signals will “help Panda identify low-quality content more precisely”.

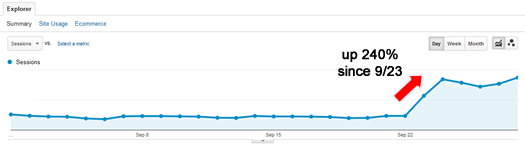

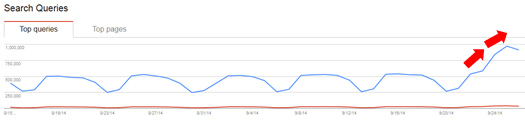

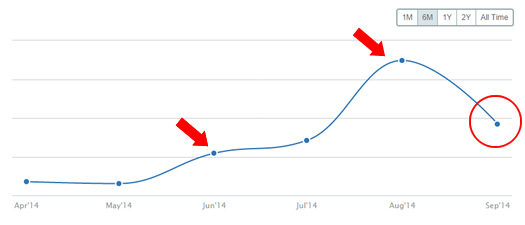

I first spotted the update late on 9/23 when some companies I have been helping with major Panda 4.0 hits absolutely popped. They had been working hard since May of 2014 on cleaning up their sites from a content quality standpoint, dealing with aggressive ad tactics, boosting credibility on their sites, etc. So it was amazing to see the surge in traffic due to the latest update.

Here are two examples of recovery during Panda 4.1. Both clients have been making significant changes over the past several months:

As a side note, two of my clients made the Searchmetrics winners list, which was released on Friday. :)

A Note About 4.1

If you follow me on Twitter, then you already know that I hate using the 4.1 tag for this update. I do a lot of Panda work and have access to a lot of Panda data. That enables me to see unconfirmed Panda updates (and tremors). There have been many updates since Panda 4.0, so this is not the only Panda update since May 20, 2014. Not even close actually.

I’ve written heavily about what I called “Panda tremors”, which were confirmed by John Mueller of Google. Also, I’ve done my best to write about subsequent Panda updates I have seen since Panda 4.0 here on my blog and on my Search Engine Watch column. By the way, the latest big update was on 9/5/14, which impacted many sites across the web. I had several clients I’ve been helping with Panda hits recover during the 9/5 update.

My main point here is that 4.1 should be called something else, like 4.75. :) But since Danny Sullivan tagged it as Panda 4.1, and everybody is using that number, then I’ll go with it. The name isn’t that important anyway. The signature of the algo is, and that’s what I’m focused on.

Panda 4.1 Analysis Process

When major updates get rolled out, I tend to dig in full blast and analyze the situation. And that’s exactly what I did with Panda 4.1. There were several angles I took while analyzing P4.1, based on the recoveries and fresh hits I know of (and have been part of).

So, here is the process I used, which can help you understand how and why I came up with the findings detailed in this post.

1. First-Party Known Recoveries

These are recoveries I have been guiding and helping with. They are clients of mine and I know everything that was wrong with their websites, content, ad problems, etc. And I also know how well changes were implemented, if they stuck, how user engagement changed during the recovery work, etc. And of course, I know the exact level of recovery seen during Panda 4.1.

2. Third-Party Known Recoveries

These are sites I know recovered, but that I’m not working with directly. Therefore, I use third-party tools to identify increases in rankings, which landing pages jumped in the rankings, etc. Then I analyze those sites to better understand the content currently surging, while also checking the previous drops due to Panda to understand their initial problems.

3. First-Party Known Fresh Hits

Based on the amount of Panda work I do, I often have a number of companies reach out to me with fresh Panda hits. Since these are confirmed Panda hits (large drops in traffic starting when P4.1 rolled out), I can feel confident that I’m reviewing a site that Panda 4.1 targeted. Since Tuesday 9/23, I have analyzed 21 websites (Update: now 42 websites) that have been freshly hit by Panda 4.1. And that number will increase by the end of this week. More companies are reaching out to me with fresh Panda hits… and I’ve been neck deep in bamboo all weekend.

4. Third-Party Unconfirmed Fresh Hits

During my analysis, I often come across other websites in a niche with trending that reveals a fresh Panda hit. Now, third party tools are not always accurate, so I don’t hold as much confidence in those fresh hits. But digging into them, identifying the lost rankings, the landing pages that were once ranking, the overall quality of the site, etc., I can often identify serious Panda candidates (sites that should have been hit). I have analyzed a number of these third-party unconfirmed fresh hits during my analysis over the past several days.

Panda 4.1 Findings

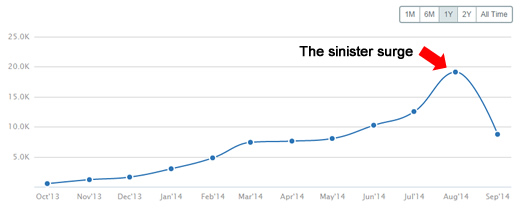

OK, now that you have a better understanding of how I came up with my findings, let’s dig into actual P4.1 problems. I’ll start with a note about the sinister surge and then jump into the findings. Also, it’s important to understand that not all of the sites were targeted by new signals. There are several factors that can throw off identifying new signals, such as when the sites were started, how the sites have changed over time, how deep in the gray area of Panda they were, etc. But the factors listed below are important to understand, and avoid. Let’s jump in.

Sinister Surge Reared Its Ugly Head

Last year I wrote a post on Search Engine Watch detailing the sinister surge in traffic prior to an algorithm hit. I saw that phenomenon so many times since February of 2011 that I wanted to make sure webmasters understood this strange, but deadly situation. After I wrote that post, I had many people contact me explaining they have seen the exact same thing. So yes, the surge is real, it’s sinister, and it’s something I saw often during my latest analysis of Panda 4.1.

By the way, the surge is sinister since most webmasters think they are surging in Google for the right reasons, when in fact, Google is dishing out more traffic to problematic content and gaining a stronger feel for user engagement. And if you have user engagement problems, then you are essentially feeding the mighty Panda “Grade-A” bamboo. It’s not long after the surge begins that the wave crashes and traffic plummets.

Understanding the surge now isn’t something that can help Panda 4.1 victims (since they have already been hit). But this can help anyone out there that sees the surge and wonders why it is happening. If you question content quality on your website, your ad situation, user engagement, etc., and you see the surge, deal with it immediately. Have an audit completed, check your landing pages from Google organic, your adjusted bounce rate, etc. Make sure users are happy. If they aren’t, then Panda will pay you a visit. And it won’t be a pleasant experience.

Affiliate Marketers Crushed

I analyzed a number of affiliate websites that got destroyed during Panda 4.1. Now, I’ve seen affiliate marketers get pummeled for a long time based on previous Panda updates, so it’s interesting that some affiliate sites that have been around for a while just got hit by Panda 4.1. Some sites I analyzed have been around since 2012 and just got hit now.

For example, there were sites with very thin content ranking for competitive keywords while their primary purpose was driving users to partner websites (like Amazon and other ecommerce sites). The landing pages only held a small paragraph up top and then listed affiliate links to Amazon (or other partner websites). Many of the pages did not contain useful information and it was clear that the sites were gateways to other sites where you could actually buy the products. I’ve seen Google cut out the middleman a thousand times since February of 2011 when Panda first rolled out, and it seems Panda 4.1 upped the aggressiveness on affiliates.

I also saw affiliate sites that had pages ranking for target keywords, but when you visited those pages the top affiliate links were listed first, pushing down the actual content that users were searching for. So when you are looking for A, but hit a page containing D, E, F, and G, with A being way down the page, you probably won’t be very happy. Clearly, the webmaster was trying to make as much money as possible by getting users to click through the affiliate links. Affiliate problems plus deception is a killer combination. More about deception later in the post.

Affiliates with Blank and/or Broken Pages

I came across sites with top landing pages from Google organic that were broken or blank. Talk about a double whammy… the sites were at risk already with pure affiliate content. But driving users to an affiliate site with pages that don’t render or break is a risky proposition for sure. I can tell you with almost 100% certainty that users were quickly bouncing back to the search results after hitting these sites. And I’ve mentioned many times before how low dwell time is a giant invitation to the mighty Panda.

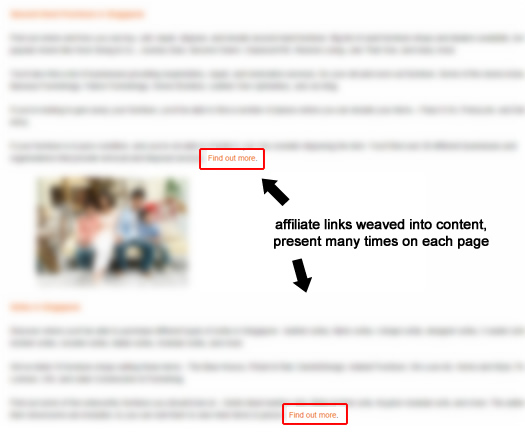

Doorway Pages + Affiliate Are Even Worse

I also analyzed several sites hit by Panda 4.1 that held many doorway pages (thin pages over-optimized for target keywords). And once you hit those pages, there were affiliate links woven throughout the content. So there were two problems here. First, you had over-optimized pages, which can get you hit. Second, you had low-quality affiliate pages that jumped users to partner websites to take action. That recipe clearly caused the sites in question to get hammered. More about over-optimization next.

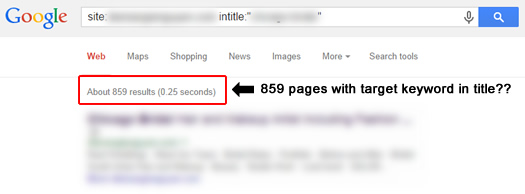

Keyword Stuffing and Doorway Pages

There seemed to be a serious uptick in sites employing keyword stuffing hit by Panda 4.1. Some pages were completely overloaded in the title tag, metadata, and in the body of the page. In addition, I saw several examples of sites using local doorway pages completely over-optimized and keyword stuffed.

For example, using {city} + {target keyword} + {city} + {second target keyword} + {city} + {third target keyword} in the title. And then using those keywords heavily throughout the page.
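To make the title pattern above concrete, here is a minimal Python sketch that flags terms repeated in a title and computes a simple keyword density. The function names, the sample title, and the `min_count` threshold are all assumptions made for illustration. Nothing here reflects how Panda actually evaluates a page; it is only a quick way to spot the kind of repetition described above.

```python
import re
from collections import Counter


def repeated_title_terms(title, min_count=3):
    """Return terms that appear at least min_count times in a title.

    min_count is an arbitrary, hypothetical threshold.
    """
    words = re.findall(r"[a-z0-9']+", title.lower())
    counts = Counter(words)
    return {word: n for word, n in counts.items() if n >= min_count}


def keyword_density(text, term):
    """Share of the words in text that equal term (0.0 for empty text)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    return words.count(term.lower()) / len(words)


# Made-up title following the {city} + {keyword} pattern.
stuffed = "Austin Plumber Austin Emergency Plumber Austin Drain Cleaning"
print(repeated_title_terms(stuffed))
print(keyword_density(stuffed, "austin"))
```

A title where the city name makes up more than a third of the words is a strong hint that it was written for search engines and not for people.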

And many of the pages did not contain high quality content. Instead, they were typically thin without useful information. Actually, some contained just an image with no copy. And then there were pages with duplicate content, just targeted to a different geographic location.

The websites I analyzed were poorly-written, hard to read through, and most people would probably laugh off the page as being written for search engines. I know I did. The days of stuffing pages and metadata with target keywords are long gone. And it’s interesting to see Panda 4.1 target a number of sites employing this tactic.
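For the local doorway pages that reuse the same copy for different locations, a rough check is to mask the city name and compare what is left. The sketch below uses Python’s standard `difflib` for that. The helper names and the sample copy are hypothetical, and this is only one simple way to measure similarity, not a description of what Google does.

```python
import re
from difflib import SequenceMatcher


def mask_city(text, city, placeholder="{city}"):
    """Replace the city name so only the non-geographic copy is compared."""
    return re.sub(re.escape(city), placeholder, text, flags=re.IGNORECASE)


def geo_page_similarity(text_a, city_a, text_b, city_b):
    """Word-level similarity (0.0 to 1.0) of two pages after masking cities."""
    words_a = mask_city(text_a, city_a).lower().split()
    words_b = mask_city(text_b, city_b).lower().split()
    return SequenceMatcher(None, words_a, words_b).ratio()


# Made-up copy from two city pages on the same hypothetical site.
page_austin = "Looking for a plumber in Austin? Our Austin plumbers are ready."
page_dallas = "Looking for a plumber in Dallas? Our Dallas plumbers are ready."
print(geo_page_similarity(page_austin, "Austin", page_dallas, "Dallas"))
```

A score at or near 1.0 across many city pages suggests the pages differ only by location name.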

Side Note About Human Beings:

It’s worth reiterating something I often tell Panda victims I’m helping. Actually, I just mentioned this in my latest Search Engine Watch column (which coincidentally went live the day after P4.1 rolled out!). Have neutral third parties go through your website and provide feedback. Most business owners are too close to their own sites, content, ad setup, etc. Real people can provide real feedback, and that input could save your site from a future Panda hit.

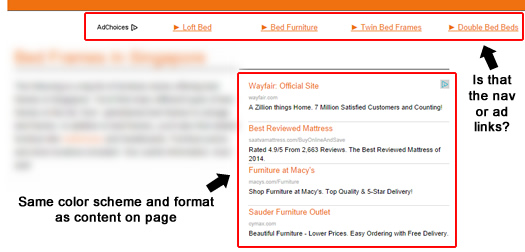

Deception

I analyzed several sites hit by Panda 4.1 with serious ad problems. For example, some had ads floating throughout the content without any organization, while others blended ads with content so closely that it was hard to decipher what was an ad and what was content.

I mentioned deception in the past, especially when referring to Panda 4.0, but I saw this again during 4.1. If you are running ads heavily on your site, then you absolutely need to make sure there is clear distinction between content and ads. If you are blending them so closely that users mistakenly click ads thinking it was content, then you are playing Russian roulette with Panda.

Users hate being deceived, and it can lead to them bouncing off the site, reporting your site to organizations focused on security, or to Google itself. They can also publicly complain to others via social networks, blogging, etc. And by the way, Google can often pick that up too (if those reviews and complaints are public). And if that happens, then you can absolutely get destroyed by Panda. I’ve seen it many times over the years, and I’ve seen it more and more since Panda 4.0.

Deception is bad. Do the right thing. Panda is always watching.

Content Farms Revisited

I can’t believe I came across this in 2014, but I did. I saw several sites that were essentially content farms that got hammered during Panda 4.1. They were packed with many (and sometimes ridiculous) how-to articles. I think many people in digital marketing understand that Panda was first created to target sites like this, so it’s hard to believe that people would go and create more… years after many of those sites had been destroyed. But that’s what I saw!

To add to the problems, the sites had a barebones design, were unorganized, wove ads and affiliate links throughout the content, etc. Some even copied how-to articles (or just the steps) from other prominent websites.

Now, to be fair to Google, several of the sites were started in 2014, so Google needed some time to better understand user engagement, the content, ad situation, etc. But here’s the crazy thing. Two of those sites surged with Panda 4.0. My reaction: “Whhaatt??” Yes, the sites benefitted somehow during the massive May 20 update. That’s a little embarrassing for Google, since it’s clearly not what they want to see rise in the rankings…

But that was temporary, as Panda 4.1 took care of the sites (although late in my opinion). So, if you are thinking about creating a site packed with ridiculous how-to articles, think again. And it goes without saying that you shouldn’t copy content from other websites. The combination will surely get you hit by Panda. I just hope Google is quicker next time with the punishment.

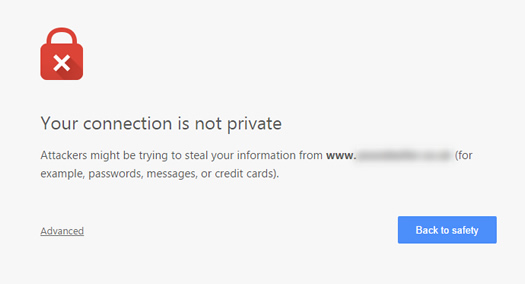

Security Warnings, Popup Ads, and Forced Downloads

There were several sites I analyzed that had been flagged by various security and trust systems. For example, several sites were flagged as providing adware, spyware, or containing viruses. I also saw several of the sites using egregious popups when first hitting the site, forcing downloads, etc.

And when Panda focuses on user engagement, launching aggressive popups and forcing downloads is like hanging fresh bamboo in the center of your website and ringing the Panda dinner bell. First, users hate popups, especially when it’s the first impression of your site. Second, they are fearful of any downloads, let alone ones you are forcing them to execute. And third, security messages in Firefox, Chrome, antivirus applications, WOT, etc. are not going to help matters.

Trust and credibility are important factors for avoiding Panda hits. Cross the line and you can send strong signals to Google that users are unhappy with your site. And bad things typically ensue.

Next Steps:

Needless to say, Panda 4.1 was a big update and many sites were impacted. Just like Panda 4.0, I’ve seen some incredible recoveries during 4.1, while also seeing some horrible fresh hits. Some of my clients saw near-full recoveries, while other sites pushing the limits of spamming got destroyed (dropping by 70%+).

I have included some final bullets below for those impacted by P4.1. My hope is that victims can begin the recovery process, while those seeing recovery can make sure the surge in traffic remains.

- If you have been hit by Panda 4.1, then run a Panda report to identify top content that was negatively impacted. Analyzing that content can often reveal glaring problems.

- Have an audit conducted. They are worth their weight in gold. Some webmasters are too close to their own content to objectively identify problems that need to be fixed.

- Have real people go through your website and provide real feedback. Don’t accept sugarcoated feedback. It won’t help.

- If you have recovered, make sure the surge in traffic remains. Follow the steps listed in my latest Search Engine Watch column to make sure you aren’t feeding Google the same (or similar) problems that got you hit in the first place.

- Understand that Panda recovery takes time. You need to first make changes, then Google needs to recrawl those changes (over time), and then Google needs to measure user engagement again. This can take months. Be patient.

- Understand that there isn’t a silver Panda bullet. I usually find a number of problems contributing to Panda attacks during my audits. Think holistically about user engagement and then factor in the various problems surfaced during an audit.

- Last, but most importantly, understand that Panda is about user happiness. Make sure user engagement is strong, users are happy with your content, and they don’t have a poor experience while traversing your website. Don’t deceive them, don’t trick them into clicking ads, and make a great first impression. If you don’t, those users can direct their feedback to Panda. And he can be a tough dude to deal with.
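As a rough illustration of the first bullet above (running a Panda report), the idea is to compare organic landing page traffic for equal-length periods before and after the update, then sort by the largest drops. The Python sketch below assumes you have already exported those visit counts into two dictionaries. The function name, the `min_before` cutoff, and all of the sample numbers are made up for illustration, and this is not the exact report referenced above.

```python
def panda_report(before, after, min_before=100):
    """Rank landing pages by percentage change in organic visits.

    before / after: dicts mapping a landing page path to visit counts for
    equal-length periods on either side of the update date.
    min_before: hypothetical cutoff that skips low-traffic pages.
    Returns (page, visits_before, visits_after, change) tuples, worst first.
    """
    rows = []
    for page, visits_before in before.items():
        if visits_before < min_before:
            continue
        visits_after = after.get(page, 0)
        change = (visits_after - visits_before) / visits_before
        rows.append((page, visits_before, visits_after, change))
    return sorted(rows, key=lambda row: row[3])


# Made-up sample data, not real client numbers.
before = {"/how-to-a": 1200, "/guide-b": 800, "/tiny": 20}
after = {"/how-to-a": 300, "/guide-b": 760}

for page, was, now, change in panda_report(before, after):
    print(f"{page}: {was} -> {now} ({change:.0%})")
```

The pages at the top of that list are the ones to review first for thin content, ad problems, or engagement issues.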

Summary – Panda 4.1 Reinforces That Users Rule

So there you have it. Findings based on analyzing a number of websites impacted by Panda 4.1. I will try and post more information as I get deeper into Panda 4.1 recovery work. Similar to other major algorithm updates, I’m confident we’ll see Panda tremors soon, which will bring recoveries, temporary recoveries, and more hits. Strap on your SEO helmets. It’s going to be an interesting ride.

GG

Thank you very much for this detailed report. I am checking my site against all of these parameters to see where it is lacking, because this Google animal has directly affected my site.

Thanks Sourabh. I’m glad my post was helpful. And definitely check out the various posts I linked to (my previous posts about Panda). I’m sure they can help too.

I posted a screen grab of a web page I have been monitoring for a particular search term. https://plus.google.com/118093341804572997553/posts/XFzKvuvTfFX

It looks to me like it was the Panda4.1 update that rewarded its bad content so it has moved from 3rd to 2nd. In this case I think it swapped positions with Tripadvisor which is now 3rd. Basically it looks like Panda simply moved Tripadvisor down and left the bad website alone. So it seems Panda does not target that kind of spammy link.

I am of course concentrating on an exception here but it is fascinating.

Thanks for the comment Chris. Panda is a domain-level algorithm, so it’s hard to say what the rest of the domain looks like. If that page is an outlier, then I can see how it slipped through the cracks. But if the domain contains many pages like that, then you might be on to something.

By the way, I often say that Panda should have been called “Octopus”, since it has many tentacles. There are a number of factors taken into account with Panda, and there typically isn’t one specific problem. I usually find a number of problems when analyzing sites impacted by Panda.

Also, I found the domain you are referring to and I’ll take a quick look later today. :) Thanks again.

Hi Glen, Thanks for getting back and taking a look. That technique does seem to be used quite a bit throughout the site although it has other factors in its favour. I’ll watch it too.

I like your idea of the octopus. I imagine Panda, like other ranking algorithms to be made up of a series of processes running through lists at their own speed and triggering other process under certain conditions so it might be hit in a week or so.

As for the Sinister Surge, is it possible that this Panda update has some penalty overlap with other algorithms which had their effect removed before the update to prevent over-penalising and causing upset?

Thanks also for your detailed post!

Hi Glenn, Just to update you on that spammy text that Google loves. The website with that contrived link text in the screen grab above is still ranking at 2 on google.co.uk and sometimes it ranks top. It is mind boggling.

Interesting, can you email me the domain? I think I remember it from when you first posted this, but I want to make sure I’m looking at the correct site. Thanks Chris.

Hi Glenn, thank you very much for sharing your advice and insights, I’m sure many webmasters who have been hit by Panda 4.1 would appreciate this article.

I’ve dropped you an email with some details pertaining to this post, please do take a look and let me know your thoughts, thank you!

Thanks, I’m glad my post was helpful. I’ll check your email later today and get back to you!

Excellent post, Glenn!

All that shit that used to be “cool” ain’t cool anymore.

Thanks Trond! Yes, now that Google can measure user engagement, those tactics are under even more scrutiny. The user rules, so webmasters need to be extremely aware of what’s going on their own sites and how users are reacting to those tactics. When Google picks up unhappy users, it sends Panda along to take care of business.

Avoid bamboo like the plague. It won’t end well. :)

> now that Google can measure user engagement

Would you mind elaborating on how Google actually measures user engagement? I mean, where does it technically take the data from?

There are several ways that Google can measure user happiness and engagement. One of the easiest ways that Google (and Bing) measure engagement is through dwell time. Low dwell time is a giant invitation to the mighty Panda. Note, dwell time is measured by niche… so coupon sites are different than news publishers.

Duane Forrester from Bing has mentioned dwell time several times in his blog posts (and presentations). That’s why adjusted bounce rate is so important to implement. You can get a much stronger feel for engagement and time on page. I hope that helps.

A hat tip for you Glenn, that’s one of the better Panda posts I’ve read in a long time.

Like yourself we also assist clients on various penalties and also belong to a data sharing network.

We all know that Google is opaque so historical data through experience becomes ever more important when trying to figure out penalty issues.

I want to share my experience of the data surge.

We have also seen this on a number of occasions and for me I feel we are beginning to see certain patterns on this subject.

From the previous 3 published Panda updates we started to analyse the surge trying to work out what may be going on.

We’ve noted that all sites that experienced a surge then drop also carry other penalties, mainly Penguin. I’m not talking about a lot of sites, maybe 20+.

But it was clear to us that the sites benefiting from Panda and kept the benefit had no link or other penalty related issues.

So we’re beginning to think that the surge may also be connected to the fact that the surge sites had other penalties.

Could it be the case that Panda required other penalties to be dropped before assessing and benefiting from Panda, then reapplied the other penalties?

We know that Penguin is a site-wide penalty, so its reapplication (and/or that of other penalties) could well bring a site that benefited from Panda back down to where it was.

I agree with you that further hits for Google testing may be the answer but I also believe that other factors may also be playing a part.

It’s early days in our findings but after 2 further published updates we should be in a better position to publish the results.

William

Thanks this is helpful…I’m guessing I got hit because of over optimization. I just went back through my pages and yes….I did put in too many keywords…just a habit…just trying to be descriptive.

But what’s annoying is that I have far more content and more pictures then any of my competition’s weak pages….yet I’m penalized ;(

I’m sorry to hear you were hit by Panda 4.1. During my analysis, it was clear to see that keyword stuffing was prevalent on a number of the sites hit by 4.1. I would definitely go through your pages and cut down on that.

The sooner the better, as I’ve already seen our first Panda 4.1 tremor. There was a lot of movement on 9/29, even with sites that were already impacted by P4.1. I expect to see several more tremors over the coming days and weeks. I hope that helps.

Interesting read. I tell people Always write for readers and not the search engine. Those ‘keywords’ fall into place naturally. Your site, blog, etc is not about you or Google its for your viewers so make it about them. You wont have to worry when Google changes if its about your viewers.

Thanks for your comment Kelly, and great point. I definitely saw an uptick in keyword stuffing during my analysis of sites hit by Panda 4.1. And I completely agree with you. Don’t think about keywords, just write a thorough post, article, or page about a specific topic and you will naturally use those keywords anyway!

Thanks for the article. I’m struggling because a few of my articles require me to repeat keywords – such as a list of all the widgets that allow you to download, for example.

Therefore the word ‘download’ appears in every single entry as my page is trying to make it easier for people to find information by consolidating it all into one place – user feedback I have received is good – there isn’t another site that consolidates all of this information, and a user instead would have to look through every single retailer website to find this information out.

How do I avoid something like that? Simply just omitting the word ‘download’ completely? In my opinion, that would make my article less readable to a normal person and instead more geared to search engines!

Karen, sorry for the delay in responding! Yes, I see that often with certain types of sites. But there are smart ways to cut down unnatural sounding/looking copy and links. I would have to see the exact page or site to provide specific feedback, but I’ve helped a number of companies with similar situations.

Also, it’s worth having a focus group go through the site (unbiased). You want real feedback from real people (and not sugarcoated feedback). You might find it’s not a problem… but you also might find it is. I hope that helps!

Interesting read Glenn.

I had some questions though. It’s understandable that affiliate websites would be penalized for thin content. Since many of them want to push users as fast as possible to other websites. However, would that be directly correlated with bounce rate? If a website has thin content, but the user spends a lot of time shopping around looking for the right deal – would that be a flag to the almighty panda? Or even vice versa; if the page has a bunch of quality content but users tend to leave would that be a flag?

Hi Andrew. Low-quality affiliate content is a killer for Panda. Although the point is to drive users downstream, many affiliate marketers have a serious problem with engagement and site credibility. Deception runs rampant on some affiliate sites.

Engagement-wise, I always recommend trying to provide some type of value-add. If not, many affiliate sites are susceptible to a Panda attack. Dwell time is measured by niche, so some will be lower on average than others. But in aggregate, many users visiting a site from the search results and clicking back to the SERPs quickly can send horrible signals to the engines.

BTW, standard bounce is flawed. You should implement adjusted bounce rate to get a stronger feel for actual engagement. Check out my post for more details -> http://www.hmtweb.com/marketing-blog/adjusted-bounce-rate-in-google-analytics/
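To show why standard bounce can mislead, here is a small Python sketch that computes both numbers from a list of sessions. Standard bounce counts every single-page session as a bounce, while the adjusted version only counts single-page sessions that ended before a time threshold. The function name, the 30-second threshold, and the sample sessions are assumptions for illustration. The sketch also assumes time on site is known for single-page sessions, which in practice requires extra tracking (that is the point of implementing adjusted bounce rate).

```python
def bounce_rates(sessions, threshold_seconds=30):
    """Return (standard, adjusted) bounce rates.

    sessions: list of (pageviews, seconds_on_site) tuples.
    threshold_seconds: hypothetical cutoff below which a single-page
    session still counts as a bounce in the adjusted figure.
    """
    if not sessions:
        return 0.0, 0.0
    single_page = [s for s in sessions if s[0] == 1]
    quick_exits = [s for s in single_page if s[1] < threshold_seconds]
    standard = len(single_page) / len(sessions)
    adjusted = len(quick_exits) / len(sessions)
    return standard, adjusted


# Made-up sessions: three single-page visits, one multi-page visit.
sessions = [(1, 5), (1, 240), (1, 90), (3, 300)]
print(bounce_rates(sessions))
```

In this made-up sample, standard bounce looks alarming, but only one visitor actually left quickly. The other two single-page visitors stayed long enough to read the page.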

I’m concerned about the section on ads and how they can be mistaken for links on the site. Your example picture looks to be using google adsense ads. So does that mean that google will penalize you for using their own ads? Or is this just a lesson not to style the ads with the same exact text color, font etc?

Thanks for your comment Julie. The real lesson is about user engagement and the impact that deception can have (Panda-wise). I’ve seen deception rear its ugly head many times (especially since Panda 4.0).

It makes a lot of sense actually. If a site tricks users into clicking ads for their own benefit, then that can enrage users. In aggregate, Google can pick this up. And when Google picks up poor user engagement, Panda can stomp all over the site.

I would be very careful when placing ads on your site. I would make sure they are clearly labeled, organized, etc. And I agree that Google’s own advice (ad-wise), is not the best advice (SEO-wise). I could write an entire post on that subject, though. :)

It just seemed funny to me that the example picture shows google ads as being bad practice :)

Right, and they even have a page explaining how to blend ads. Ugh. I’ve seen deceptive ads cause many problems Panda-wise. I would be very careful with your approach. :)

First, thanks for the great post covering so many possibilities. I think most of this is sound advice although it would be nice to see some numbers of whether these types of things are actually predictive of Panda losses.

That being said, the “sinister surge” is very interesting. I think it is actually the result of the standard testing model that Google uses. Most of the sites that get hit by Panda are in spaces where others likely to be hit by Panda are. When testing rolls out, lets say at 10% strength, you would expect to see some growth as long as your site wasn’t included in the testing.

Great article, once again.

Thanks for your comment Ryan. I’ve read several of your posts over the past year or so too. During my analysis, I was looking for similar trends across sites hit by P4.1 (that maybe weren’t apparent or active in previous versions of Panda). And now that I’ve analyzed over 40 sites hit by Panda 4.1, I’m even a stronger believer in my original analysis (listed above). :)

I’ve written before that Panda should have been named “Octopus”, since it has many tentacles. As Panda gets more complex, which it’s clearly doing, that analogy makes even more sense. To me, any factor that negatively impacts user engagement could eventually become part of Panda. As an example, I’ve now seen keyword stuffing across a number of sites impacted by P4.1. It’s hard to believe that wasn’t added to the algo in some manner.

Regarding the sinister surge, I’ve seen that so many times over the past few years that I had to write about it (which I did in my Search Engine Watch column). Then I had many others contact me about the same trending! It’s really interesting to see how Google does that… and it is sinister. Most webmasters think it’s positive, when in fact, Google is simply collecting more engagement data. Then boom, the wave crashes.

Anyway, thanks for your comment. I plan to write more in the coming weeks based on my latest Panda analysis. Stay tuned.

Thank you, Glenn! Very interesting read. I’ve heard various opinions on whether or not nofollow affiliate links have an effect. What are your thoughts?

Thanks Camille. I do believe that has an effect. And it’s a problem I see often when analyzing affiliate websites impacted by Panda (followed affiliate links). All affiliate links should be nofollowed. That’s literally a check in my audit process. :) I hope that helps.
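For anyone who wants to run that check on their own pages, here is a minimal Python sketch using only the standard library. It lists links that point to a given set of affiliate domains and lack `rel="nofollow"`. The class name, the helper function, the domain list, and the sample HTML are hypothetical, and this is just one simple way to do it, not the exact check from my audit process.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class AffiliateLinkAudit(HTMLParser):
    """Collects links to affiliate domains that are missing rel="nofollow"."""

    def __init__(self, affiliate_domains):
        super().__init__()
        self.affiliate_domains = set(affiliate_domains)
        self.followed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href") or ""
        host = (urlparse(href).hostname or "").lower()
        if host.startswith("www."):
            host = host[4:]
        if host not in self.affiliate_domains:
            return
        rel_tokens = (attr.get("rel") or "").lower().split()
        if "nofollow" not in rel_tokens:
            self.followed.append(href)


def followed_affiliate_links(html, affiliate_domains):
    """Return hrefs of affiliate links that are not nofollowed."""
    audit = AffiliateLinkAudit(affiliate_domains)
    audit.feed(html)
    return audit.followed


# Made-up page fragment; the product paths are placeholders.
sample_html = """
<a href="https://www.amazon.com/dp/EXAMPLE1">Buy now</a>
<a href="https://www.amazon.com/dp/EXAMPLE2" rel="nofollow sponsored">Buy now</a>
<a href="/about">About us</a>
"""
print(followed_affiliate_links(sample_html, ["amazon.com"]))
```

Any URL this returns is a followed affiliate link that should be reviewed.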

Thank you, Glenn! That helps a lot. I’ve always used nofollowed affiliate links but I recently read that nofollowed links could still have a negative impact. I thought that was impossible, but with all of the changes I wasn’t sure. Thank you so much for your help!

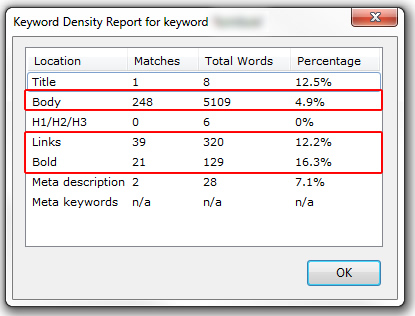

Glenn – awesome Panda article as always. Got a question – what is that tool u screenshot for keyword density? http://www.hmtweb.com/images/panda41-keyword-density.jpg

Thank you Bartosz! I appreciate it. I’m glad my post was helpful. That’s the SearchStatus plugin for Firefox. Has a bunch of nifty functionality for checking page details. Just right click and select “Show Keyword Density”. I hope that helps.

Great post. We’ve been seeing a lot of the same issues with internal and client sites. Our biggest hit started back in v4.0, but we’ve seen another downward adjustment in 4.1 with a nationwide directory-type site. It only shows results (indexed pages) in areas where there is a provider presence, but we’ve found it difficult to come up with unique content for each specific geo. On the one hand, we can take away content that’s replicated on each city page and just leave the listings, but that makes the pages very bare. Or we can add the content, but that gives you hundreds of pages of duplicate content. We are exploring the idea of using #urls for some of the content. We don’t have any data to support this idea, but it’s worth a shot.

My site has dropped in rank from page 1 to page 18. Can you suggest something to help it get back?

Thank you

You need to analyze your site through a Panda lens (targeting low-quality content). I would audit your site, target problematic content, and then act (that can mean nuking the content, rewriting it, etc.). You should check out my post about running a Panda report via Google Analytics. That might get you moving in the right direction.

Hey, Glenn,

When you say there have been many updates since Panda 4.0, are you not referring to refreshes–which would be normal of course, since Panda is pretty much moving into the Everflux–or are you talking signals?

keep up the good work,

-Matt

Matt, I’m referring to refreshes. I’ve seen near-weekly updates since Panda 4.0. Google tends to confirm updates when signals change (but there’s no guarantee of that happening).

Also, I haven’t seen a Panda tremor in a while… Google may be holding off until the holiday season is done (and they are definitely focusing on Penguin right now). Just an FYI.

Hi Glenn,

Thank you for the analysis above. I enjoyed reading it.

I have a question for you or anyone who is able to give me a good response.

I have a project in mind: running a website using datafeedr + WooCommerce. The niche I’m talking about should have about 20K-50K products.

Is it safe to run everything automated, or do I need to give a unique description to each product? What do you think?

Or can I just keep using the descriptions from the product websites?

Thank you in advance :)

Hard to say without knowing the source of the data. If you are working with manufacturer descriptions, then Google is on record saying they won’t hurt you (since many ecommerce retailers deal with that situation). I just posted a link to a webmaster hangout video yesterday with John Mueller addressing that question. Here’s the link -> https://www.youtube.com/watch?v=R5Jc2twXZlw&t=22m08s

That said, I would not go down the path of a completely autogenerated site. I would still try to provide as much unique and valuable information as you can on product pages, category pages, etc. John addresses that in the video as well, and explains how that can help you. I hope that helps.

Hello Glenn. I have an affiliate website, and I think it has been hit by Panda. Could this be the sinister surge? My site is camisetasdejugadores.com. Thanks

What was the date the site saw a drop in Google organic traffic? I can check my dates and let you know. Has the site rebounded at all since then?

The drop started in October. My site was keyword stuffed, and yesterday I finished changing all posts one by one. I’m still being affected.

I maintain our small business’ website, and have been trying to figure out why our traffic suddenly went from 30-50 clicks per day down to 0-4. Seeing as how the drop happened on September 23rd, I’m guessing we probably got hit by something related to this. (Sept. 22nd=42 clicks. Sept. 23rd=2 clicks.) Nothing has really changed on our site recently, but we did become a Carbonite partner and I included a link in our footer to our Carbonite affiliate page. Do you think I’m being falsely targeted and penalized? I’ve talked to a rep at Google, and we went over my AdWords account and he suggested some changes there which are helping a LITTLE, but I’m not nearly back up to where we were. The site is greatsoutherncomputers.com, if you’re interested. We’re actually the oldest computer store in Louisiana, and our website is mostly just an informational page about our products and services. Thanks.

Interesting, if you were hit on 9/23, then it was definitely Panda. Have you audited the site yet through a Panda lens? That’s what you need to do. I would start by running a Panda report (http://www.hmtweb.com/marketing-blog/panda-report-top-landing-pages-google-organic/). That should get you moving in the right direction. I hope that helps!

Hi,

First of all, thanks for all your articles!

I own an online wine shop. It’s well optimized for SEO and gets good traffic through long-tail keywords, but since I’m running another project and the margins for this shop are quite low (~20% after I pay all my bills…), I’m thinking of turning the site into an affiliate website.

I have well-ranking landing pages for each relevant keyword, with good content that isn’t keyword stuffed :). The idea is to present affiliate products on these pages instead of my own products, but without a direct affiliate link. Instead, each product will have its own page with a self-written description (not coming from the affiliate), and on this product page the user will find a link to my partner.

That means the following:

- No affiliate links on the landing page

- Each product has its own page with unique content

- Only one affiliate link per product page

- Users will see at least 2 pages before going to my partner’s website

Is this the best way to do it?

Thanks in advance for your help!

Thanks for your comment Julien. I would be very careful with how you approach the transition. I’ve had many affiliate marketers reach out to me after getting pummeled by Panda (since 2011). I’ve definitely helped several navigate through to recovery, but it’s not easy.

Without seeing your current site, the exact way you are going to transition, etc., it’s hard to provide any advice. But Google is absolutely going to pick up what’s happening, how that changes user engagement, user happiness, etc. Just my 2 cents. :)