The fall of 2016 was an extremely volatile time from a Google algorithm update standpoint. Actually, I believe it was the most volatile fall I have ever seen. We saw numerous updates from Google, including partial rollbacks. For example, we had Penguin 4 in late September and early October, and then massive volatility in November (with some rollbacks), which then rolled into December. It was a crazy few months for sure.

And since I heavily focus on algorithm updates, I felt like I was riding a Google coaster!

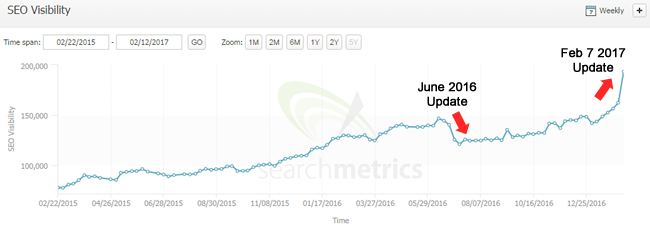

Once the updates calmed down (after the December 18, 2016 update), I knew the volatility could return in early 2017. And I was right. We saw an early January core update around 1/4/17 and then a strange update on 2/1 that seemed to target PBNs and links in general (which I may cover in a separate post). But little did I know a major update was brewing. It rolled out on February 7, 2017, and it was big. Barry Schwartz was the first to pick up chatter about the update, and it wasn’t long before I started seeing major volatility.

Examples of Impact:

If you’ve read my posts about algorithm updates, then you know the deal already. But if this is your first time reading my algo update posts, then here’s a quick rundown. I have access to a lot of data from websites that have experienced quality problems in the past. That includes Panda, Phantom, and other core ranking updates. That enables me to see movement across sites, verticals, and countries when algorithm updates roll out.

In addition, a number of companies reach out to me for help after seeing negative impact (or to let me know about positive impact). So, I’m able to see fresh hits and recoveries as well. And then I dig into each vertical to find more impact. Between all of that, I get to see a lot of movement, especially with algorithm updates as large as the 2/7/17 update.

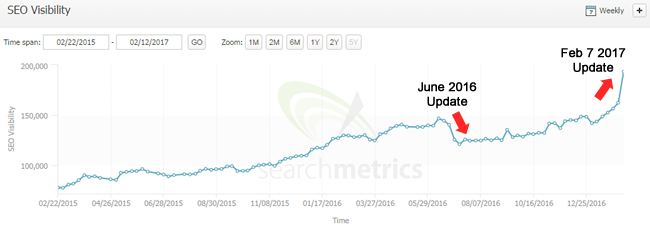

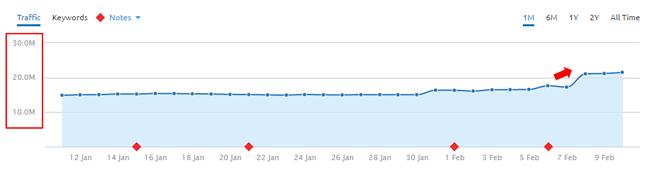

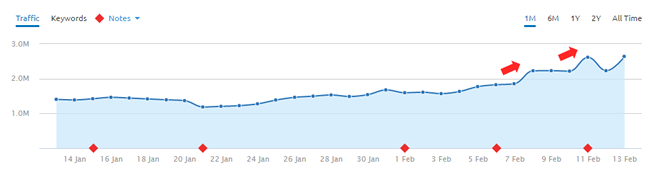

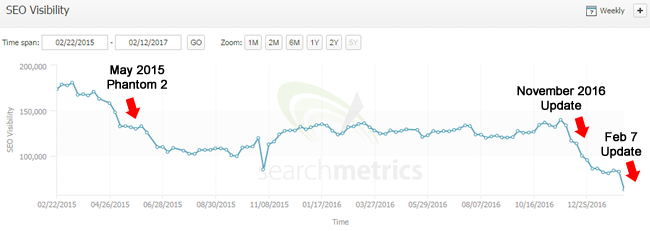

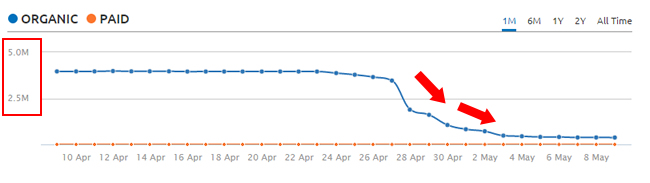

So what have I seen over the past week? Here are some screenshots of search visibility movement based on the February 7 update:

Positive impact:

Negative impact:

Big Rankings Swings

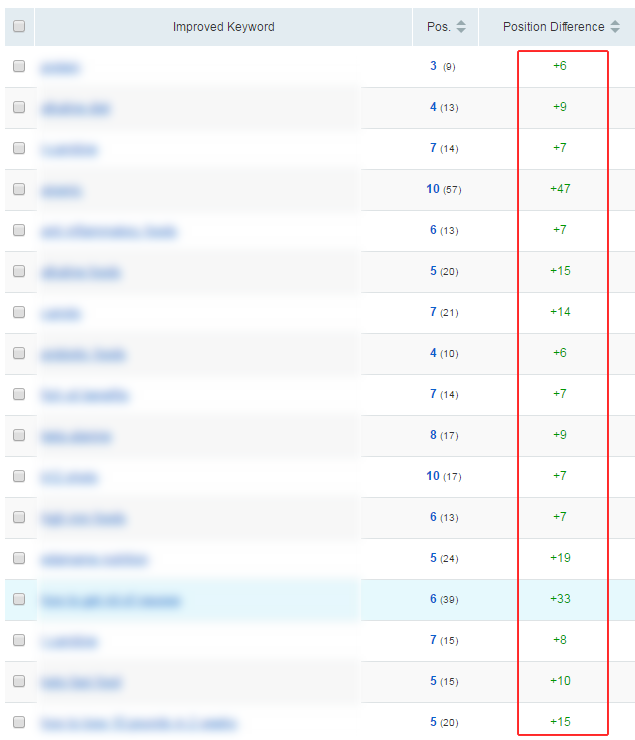

When an update like this hits, it’s amazing to see the rankings changes for impacted sites. You typically see big swings in rankings for many keywords, with jumps of ten to twenty spots, or even more. Here are some screenshots of big rankings increases from sites impacted by the 2/7 update:

Google’s Core Ranking Updates (and connections to previous updates):

In May of 2015 I uncovered a major algorithm update that I called Phantom 2. It was a core ranking update that Google ultimately confirmed. They said it was a change in how they assessed “quality”. It was a huge update, just like this one. For example, here’s a screenshot of a site that got hammered:

Since then, there have been numerous core ranking updates that seem to target very similar things. I’ll explain more about that below, but think low quality content, thin content, user experience barriers, ad deception, etc.

It seems the algorithm(s) that are part of the core ranking updates need to be refreshed, and when they are, all hell can break loose. It reminds me of old-school Panda updates. In addition, and this makes sense, many sites impacted by this update show clear connections to previous core ranking updates. That doesn’t mean a site must have been impacted by a previous update to see movement, but sites that make changes (or fall out of the gray area) can see movement during subsequent core updates. I’ll cover that more below.

For example, here are a few screenshots showing the connection between previous major core ranking updates and the February 7 update:

The Connection to Google’s Quality Rater Guidelines

I presented at the Search Engine Journal summit this past fall (in the middle of all the crazy algo updates). In that presentation, I explained something I called “quality user engagement”. I’ve seen a lot of evidence of UX barriers and ad problems causing drops during these core ranking updates, and I used the phrase to describe those problems.

Basically, don’t just look at content quality. There’s more to it. Understand the barriers you are presenting to users and address those. For example, aggressive ad placement, deception for monetization purposes, broken UX elements, autoplay video and audio, aggressive popups and interstitials, and more.

And when referring to “quality user engagement”, I often point people to Google’s Quality Rater Guidelines (QRG). The QRG is packed with amazing information about how human raters should test, and then rate, pages and sites from a quality standpoint.

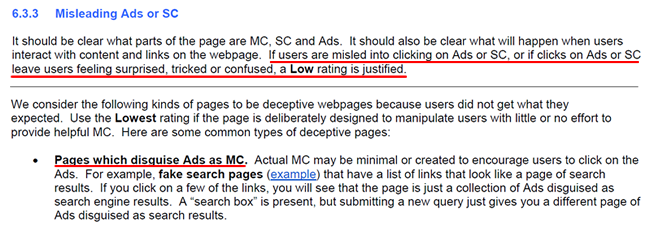

The amazing thing about the Quality Rater Guidelines is that there’s a serious connection to what I’m seeing in the field while analyzing sites getting hit by core ranking updates. For example, check out this quote below about ad deception. I can’t tell you how many times aggressive monetization leads to an algorithm hit.

So go read the QRG… it’s an eye-opening experience. And the other reason I’m bringing this up is because of an interesting tweet from Google’s Gary Illyes on Sunday (right after the algo update). As part of his “Did You Know” series of tweets, he explained that the QRG were updated and they contain great information right from Google. It was interesting timing, to say the least.

Now, the guidelines were last updated in March of 2016, almost a year ago. So why tweet about them now? It might just be a coincidence, but maybe it’s due to the major update on 2/7? Only Gary knows. Regardless, I highly recommend reading the QRG.

Here’s Gary’s tweet:

Negative Impact

Since 2/7, I’ve dug into many sites that experienced negative movement. And some saw significant drops across keywords. Basically, Google’s algos are saying the site isn’t as high quality as they thought, rankings should be adjusted, and it’s a site-wide adjustment. Sure, some keywords might still rank well, but many dropped in position, and some dropped significantly.

I can’t cover every factor I saw during my travels, or this post would be excessively long (and it’s already long!). But I’ll definitely cover several of the most common ones. And don’t forget what I said above about the Quality Rater Guidelines. Go read them today if you haven’t already. They’re packed with great information that directly ties to many situations I’ve seen while analyzing sites impacted by core ranking updates.

Increase in Relevancy & The Connection To Google Panda

The first thing that jumped out at me was the noticeable increase in relevancy for many queries I was checking. For example, on sites that saw negative impact, I checked a number of queries where the site dropped significantly in rankings.

The relevancy of those search results had greatly increased. Actually, there were times I couldn’t believe the sites in question were ranking for those queries at one time (but they were prior to 2/7). Sure, the content was tangentially related to the query, but did not address the subject matter or questions directly.

And if you’ve been paying attention to Google Panda over the past few years, then your bamboo radar should be active. In January of 2016 we learned that Panda became part of Google’s core ranking algorithm. That was huge news, but we had seen major changes in Panda over time. We knew something was going on with our cute, black and white friend.

I saw big changes in Panda from 4.0 to 4.2, which led me to believe the algorithm was being refined, adjusted, etc. You can read my post about Panda 4.2 to learn more. Well, after the announcement about Panda being part of Google’s core ranking algorithm, Gary Illyes kept explaining Panda in an interesting way. I covered this in a post last year when covering the March 2016 Google algorithm update.

Gary explained that Google did not view Panda as a penalty. Instead, Panda looked to adjust rankings for sites that did not meet user expectations from a content standpoint. So Panda would adjust rankings for overly prominent sites. That’s a huge change from Panda of the past (where sites could drop by 65%+ overnight).

Here’s the video clip (check 7:47 in the video):

The reason I’m bringing this up is because I saw a lot of “relevancy movement” during my analysis of the 2/7 update. Now, if that was all I saw, I would say this was Panda at work. But that’s not all I saw. Instead, I saw a mix of problems causing drops, including low-quality user engagement. So if Panda was part of this update somehow, then the update combined Panda with other quality algorithms that were refreshed. Hard to say for sure, but I saw enough relevancy adjustments that I wanted to bring it up in this post.

Low-Quality User Engagement

Just like with other core ranking updates, low-quality user engagement reared its ugly head. I saw this often when checking sites that saw big drops. For example, broken user interfaces, menus that didn’t work (either on purpose or by mistake), extremely bulky and confusing navigation, ad deception and crazy ad placement (more on that soon), excessive pagination for monetization purposes, and other UX barriers.

This is consistent with previous core ranking updates dating back to Phantom 2 in May of 2015. To learn more about these issues, I recommend reading all of my previous posts about Google’s quality updates. I have covered many different situations in those posts. In addition, read the Quality Rater Guidelines. There is plenty of information about what should be rated “low quality”.

For example, I’ve seen sites forcing users through 38 pages of pagination to view an article. Yes, 38.

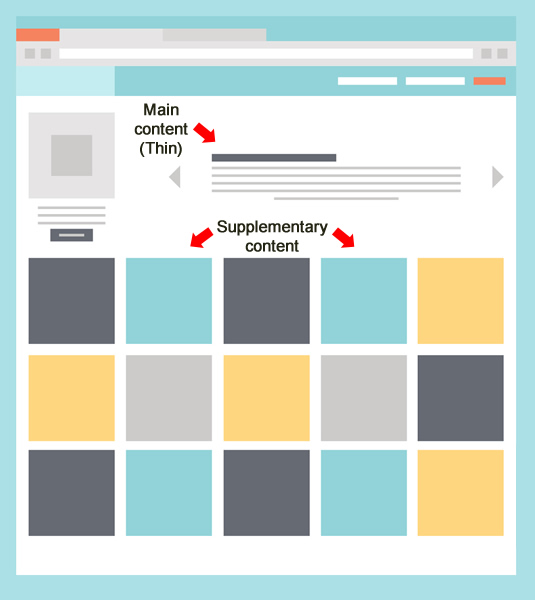

Thin Content and Low-Quality Content

Similar to UX barriers, low-quality content and thin content have been on my list of problems related to Google’s core ranking updates from the start. I often checked pages that dropped significantly and found problematic content.

These sites once ranked for keywords leading to that content, but either chose to keep the thin content, or weren’t aware of it (which isn’t surprising given how large some of those sites are). It underscores the importance of continually analyzing your website, rooting out content problems, enhancing content where needed, removing UX barriers, etc. If you don’t, problems can slip in. And as those problems grow, you can get hit hard by Google’s core ranking updates.

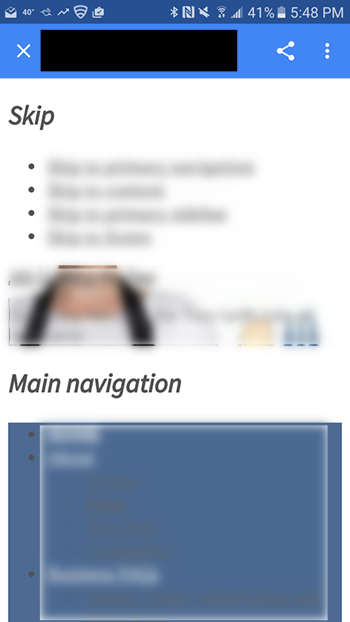

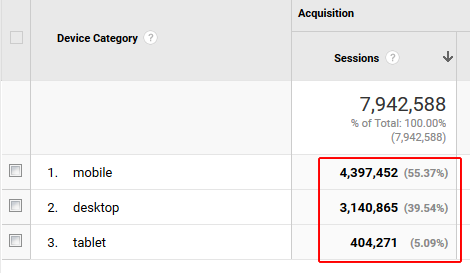

Mobile Usability Problems

I’ve seen this with previous core ranking updates as well. We know Google is testing its mobile-first index, but it hasn’t rolled out yet. But even before “mobile-first” was announced, I saw serious mobile problems on some sites that were negatively impacted by core ranking updates.

During my latest travels, I saw certain sites that were nearly unusable on mobile. They were ranking well for many queries, but when you hit the site on a mobile device, the menus didn’t work well, you could scroll horizontally, UX elements were out of place, etc.

And in case you’re wondering, this had nothing to do with Google’s mobile popup algorithm. I’ve been heavily tracking that update too. You can read more about my findings (or lack of findings) in my post and in my Search Engine Land column.

So make sure you check your site on various mobile devices to ensure users can do what they need to do. If they are running into problems, then that can send horrible signals to Google that they didn’t find what they wanted (or were having a hard time doing so). Beware.
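If you want a quick, automated first pass before testing on real devices, one small thing worth checking is whether your templates declare a responsive viewport. Without a `width=device-width` viewport meta tag, mobile browsers typically lay the page out at a desktop-like width and shrink it, which is a common source of tiny text and broken layouts. Here’s a minimal sketch using only Python’s standard library. It assumes you already have each page’s HTML as a string, and the function and class names are hypothetical. It’s one narrow signal, not a mobile usability audit, so it doesn’t replace checking the site by hand.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Collects the content attribute of every <meta name="viewport"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.viewports: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "viewport":
            self.viewports.append(attr_map.get("content", ""))


def has_responsive_viewport(html: str) -> bool:
    """Return True if any viewport meta tag sets width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    return any(
        "width=device-width" in content.replace(" ", "").lower()
        for content in finder.viewports
    )


# Hypothetical page snippets for illustration.
good = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
bad = "<head><title>Article</title></head>"
print(has_responsive_viewport(good))  # True
print(has_responsive_viewport(bad))   # False
```

A page can pass this check and still have broken menus or out-of-place UX elements, so treat a `False` as a prompt to look closer, and a `True` as no guarantee at all.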

An example of a mobile UX breaking:

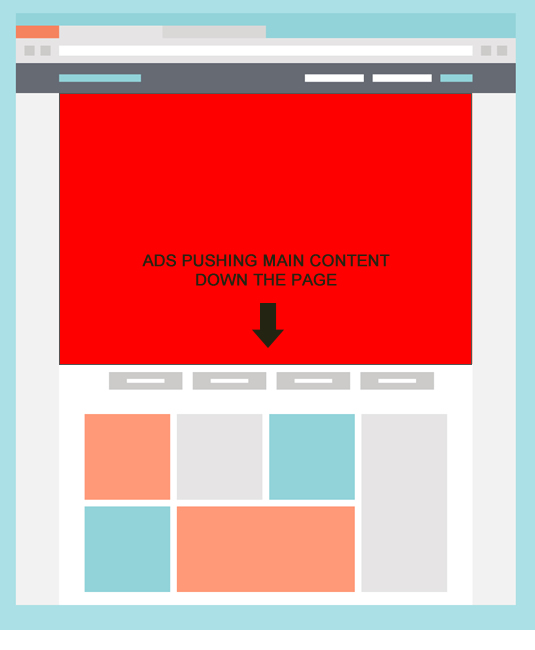

Ad Deception

Ah, we meet again my old friend. Just like with previous core ranking updates and old-school Panda updates, ad deception could cause big problems. For example, weaving ads into your content could drive users insane (especially when those ads match your own content styling-wise). They might mistakenly click those ads and be sent downstream to a third-party advertiser site when they thought they were staying on your own site.

Think about the horrible signals you could be sending Google by doing that to users. Add aggressive third-party sites serving malware and malicious downloads, and you have a recipe for disaster. Users are shocked as they get taken off your site, and then angered even more as they get hit by malware. Not good.

By the way, ad deception is specifically mentioned in Google’s Quality Rater Guidelines. Don’t do this. You are playing Russian roulette with your rankings. I’ve seen this a thousand times over the years (with an uptick since May of 2015). Again, beware.

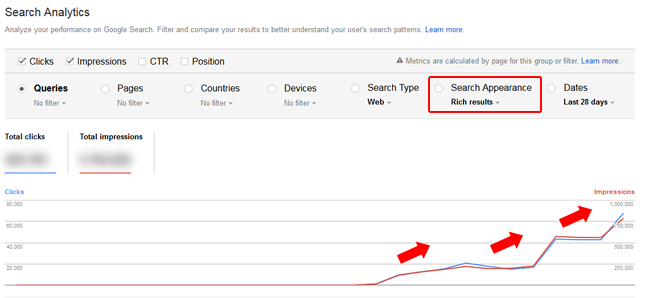

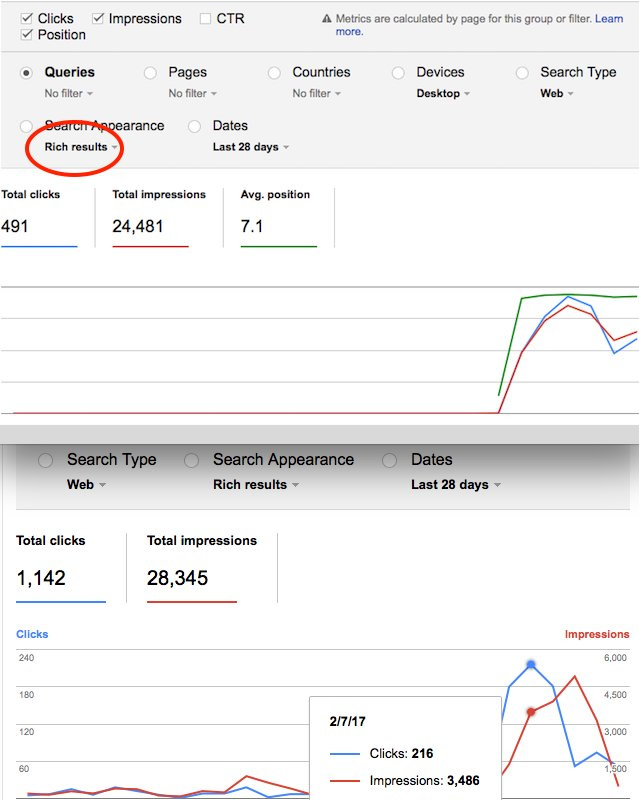

Side Note: Rich Snippets Algo Refreshed

This is not the first time that rich snippets have either appeared or disappeared for sites during core ranking updates. It’s pretty clear that Google’s rich snippets algo was refreshed either during, or right around, the 2/7 update. And since core ranking updates are focused on quality, that makes complete sense.

For example, there’s a quality threshold for receiving rich snippets. If your site falls below the threshold, then they can be removed. If it’s deemed “high quality”, then you can receive them. Well, I saw several examples of rich snippets either showing up or being removed during the 2/7 update. So if you’ve been impacted from a rich snippets standpoint, work hard to improve quality. They can definitely return, but it will take another core update and refresh of the rich snippets algo for that to happen. Based on what I’ve seen, that’s happening every few months.

And Jim Stewart reached out to me on Twitter about what he saw with rich snippets:

If you are interested in learning more about Google’s site-level quality signals and how they can impact rich snippets, then definitely read my post covering the topic. I provide a lot of information about how that works and provide examples of that happening during major algorithm updates.

Positive Movement on 2/7

So that’s a sampling of problems I saw on sites that were negatively impacted by the 2/7/17 update. But what about sites that improved? Here are some things that sites hit by a core ranking update have done before experiencing a recovery, or partial recovery, during subsequent updates:

Improved “Quality Indexation”:

I’ve brought up what I call “quality indexation” quite a bit in the past. That’s the idea that you only want your highest quality pages indexed. Don’t shoot for quantity… Instead, shoot for quality. When helping companies that have been negatively impacted by core ranking updates, it’s not unusual to find massive amounts of thin or low quality content on the site.

One of the first things I do is surface those urls and work with clients to understand their options. First, did you know this was indexed? If so, is there any way to enhance the content there? And if it’s too big of a job, then work on removing those urls from Google’s index. That last option is typically for larger-scale sites with hundreds of thousands, or millions of pages indexed.

Google’s quality algorithms will use the urls indexed to “score” the site. So if those low quality urls are either enhanced or removed, that can be a good thing. This was a factor I saw on several sites that surged during the 2/7 update.
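Here’s a rough sketch of how you might build a first-pass list of urls to review, assuming you’ve exported a word count (from a crawl) and Google organic clicks (from analytics) for each indexed url. The field names and thresholds are hypothetical and need tuning per site. And since short isn’t the same as thin, the sketch only flags urls that are both short *and* attracting almost no clicks. The output is a list for manual review (enhance, noindex, or remove), not something to act on automatically.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    word_count: int      # main-content words, e.g. from a crawl export (hypothetical field)
    organic_clicks: int  # Google organic clicks over a review window (hypothetical field)


def thin_content_candidates(pages, max_words=250, max_clicks=5):
    """Return urls that are short AND get almost no organic clicks.

    Thresholds are illustrative. Results are candidates for manual review
    (enhance, noindex, or remove), not an automatic removal list.
    """
    flagged = [
        p for p in pages
        if p.word_count < max_words and p.organic_clicks <= max_clicks
    ]
    # Review the least-visited, shortest pages first.
    flagged.sort(key=lambda p: (p.organic_clicks, p.word_count))
    return [p.url for p in flagged]


pages = [
    PageStats("/guide/complete-setup", 2400, 310),
    PageStats("/tag/misc-17", 40, 0),
    PageStats("/news/short-update", 180, 95),  # short, but clearly valuable to users
    PageStats("/archive/page-912", 120, 2),
]
print(thin_content_candidates(pages))  # ['/tag/misc-17', '/archive/page-912']
```

Note that the short news update isn’t flagged, because users are clearly finding it. That’s the kind of page you’d want to keep.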

Cut down on ad aggressiveness:

There are times companies reach out to me after getting hit and they have no idea why they were negatively impacted. They focus on their content quality and links, but don’t take “quality user engagement” into account.

When analyzing negative hits, it’s not unusual to see urls with way too many ads, ads that push the content down on the page, or ads that are so annoying that it’s hard to focus on the primary content.

And then you have excessive pagination for monetization purposes, which fits into the same major category. For example, forcing users through 38 pages of pagination to read an article (as I mentioned earlier). And of course, you have ads in between every component page. Think about your users. How do they feel about that? And what signals does that send to Google about user happiness (or lack thereof)?

Improved UX on both desktop and mobile:

I mentioned mobile UX problems earlier on sites that were negatively impacted. Well, there are companies that have enhanced their mobile experience that saw gains. Now, I’m not saying that was the only change… since companies typically have to make substantial changes to quality overall in order to see positive movement. But it’s worth noting.

For example, moving to a responsive design, enhancing content on mobile urls, and creating a stronger navigation/internal linking structure for mobile. And with some of my clients having 60-70% of visits from mobile devices, it’s critically important to ensure those users are happy, can find what they need, and navigate through the site seamlessly.

And from a desktop standpoint, fixing usability barriers like broken elements, navigation, ad placement, etc. all contributed to a strong user experience. It wasn’t hard to surface these problems, by the way. You just need to objectively move through your site, rate the experience, and identify pitfalls, aggressive site behavior, and broken UX components.

For example, how important do you think a mobile UX is when 55% of your traffic is from mobile devices?

Fixed technical SEO problems that could be causing quality problems:

I’ve always said that technical SEO problems could cause quality problems. For example, glitches that cause thin content (or no content) produced across a large-scale site. Or massive canonicalization problems impacting many pages across a site. Or meta robots issues, or robots.txt problems, that cause pages to disappear from the index (so they can’t help you quality-wise). So on and so forth.

Sites I’ve helped that saw gains also fixed problems like this along the way. It’s more about site health than specific quality problems, but it’s also worth noting. Don’t throw barriers in the way of users and Googlebot.
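For the robots.txt and meta robots problems mentioned above, a quick sanity check is to test your most important urls directly. Below is a minimal sketch using Python’s standard `urllib.robotparser` and `html.parser` modules; the sample robots.txt, urls, and helper names are made up for illustration. One caveat: the standard-library parser applies rules in file order and does not support Google’s `*` and `$` wildcards, so treat its answers as approximate and confirm anything surprising with Google’s own testing tools. It also ignores `X-Robots-Tag` HTTP headers, which can noindex pages too.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def urls_blocked_by_robots(robots_txt, urls, user_agent="Googlebot"):
    """Return the urls that robots.txt disallows for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


class MetaRobotsFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in ("robots", "googlebot"):
            content = attr_map.get("content", "")
            self.directives.extend(d.strip().lower() for d in content.split(","))


def has_noindex(html):
    """True if a robots/googlebot meta tag on the page contains noindex."""
    finder = MetaRobotsFinder()
    finder.feed(html)
    return "noindex" in finder.directives


# Made-up robots.txt and urls for illustration.
robots = """User-agent: *
Disallow: /search
Disallow: /tmp/
"""
urls = [
    "https://example.com/blog/post-1",
    "https://example.com/search?q=widgets",
    "https://example.com/tmp/draft",
]
print(urls_blocked_by_robots(robots, urls))
# ['https://example.com/search?q=widgets', 'https://example.com/tmp/draft']
print(has_noindex('<meta name="robots" content="noindex, follow">'))  # True
```

If a url you care about shows up in either check, that’s worth investigating before worrying about anything else quality-wise.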

Again, I can’t cover all problems fixed or all areas addressed by sites that saw improvement, but I hope you get the gist. As Google’s John Mueller has said many times, you need to significantly improve quality across your site over the long-term in order to see positive movement from Google’s core ranking updates. It can take time to audit, time to make changes, and then you need to give Google time to evaluate those changes. And then you need the algos to be refreshed. That can take months, and typically does.

Here’s a video of Google’s John Mueller explaining that sites need to increase quality substantially in order to see impact (at 39:33 in the video):

Moving Forward:

I’m going to sound like a broken record here, but this is similar advice I have given for a long time when it comes to Google’s core ranking updates. Here we go:

- You must know your site inside and out. And you must objectively analyze your site to weed out low quality content, low quality user engagement, ad aggressiveness, over-monetization, UX barriers, etc.

- I recommend performing a crawl analysis and audit of your site to surface potential quality problems. And make sure you crawl the site as both Googlebot and Googlebot for Smartphones in order to understand both the desktop and mobile situation.

- Run a Panda report (which can be completed for any algo update or traffic loss) to understand the urls seeing the most volatility. Then dig in to those urls. You might just find serious issues with the content and/or user experience on urls that were receiving the most impressions and clicks PRIOR to the algorithm update.

- Move fast to make changes. The quicker you can surface problems and make changes, the more chance you have of recovery during the next major core ranking update. I don’t mean you should rush changes through (which can be flawed and cause more problems). But move fast to identify big quality problems, and then fix them quickly. Remember to check those changes in staging and then once they go live. Don’t cause bigger problems by rolling out flawed updates.

- Understand the time to recovery. As I’ve explained earlier, it can take months for sites to see recovery after getting hit by a core ranking update. So don’t have a knee-jerk reaction just a few weeks after implementing changes. Keep the right changes in place for the long-term and keep increasing quality over time. I’ve seen sites roll out the right changes, and then reverse them before the next major algo update. You can drive yourself mad if you do that.
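The Panda report mentioned above boils down to a before/after comparison of Google organic landing pages. Here’s a rough sketch, assuming you’ve exported Google organic clicks per landing page for a window before the update and an equal-length window after it (the dictionaries below are hypothetical data, and `panda_report` is just an illustrative name, not a tool). It surfaces the urls that lost the most clicks so you know where to dig in first.

```python
from typing import NamedTuple, Optional


class UrlChange(NamedTuple):
    url: str
    clicks_before: int
    clicks_after: int
    change: int
    pct_change: Optional[float]  # None when the url had no clicks before


def panda_report(before, after, top_n=10):
    """Rank landing pages by the largest drop in Google organic clicks.

    `before` and `after` map landing-page url -> clicks for two
    equal-length windows on either side of the update.
    """
    rows = []
    for url in set(before) | set(after):
        b = before.get(url, 0)
        a = after.get(url, 0)
        pct = (a - b) / b * 100 if b else None
        rows.append(UrlChange(url, b, a, a - b, pct))
    # Biggest losses first; ties broken by url so output is stable.
    rows.sort(key=lambda r: (r.change, r.url))
    return rows[:top_n]


# Hypothetical exports: clicks per landing page, before vs. after the update.
before = {"/best-widgets": 1200, "/widget-reviews": 800, "/old-news": 50}
after = {"/best-widgets": 400, "/widget-reviews": 790, "/new-guide": 30}

# Lists /best-widgets first, then /old-news, then /widget-reviews.
for row in panda_report(before, after, top_n=3):
    pct = "n/a" if row.pct_change is None else f"{row.pct_change:.1f}%"
    print(f"{row.url:<16} {row.clicks_before:>5} -> {row.clicks_after:>5}  ({pct})")
```

Comparing equal-length windows (ideally covering the same days of the week) helps keep seasonality from muddying the results. From there, check the queries driving each of the top urls and review the content and user experience on those pages.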

Summary – The February 7, 2017 Update Was Substantial

The 2/7/17 update was significant and many sites saw substantial movement (either up or down). If you’ve been negatively impacted by the update, go back through my post, and my other posts about Google’s core ranking updates, to better understand what could be causing problems. Then work hard to analyze your site objectively and weed out the problems. Maintain a long-term view for improving your site quality-wise. Don’t put band-aids on the situation. Make significant changes to your site to improve quality overall. That’s how you win. Good luck.

GG

One of my big news sites was hit around January 24-25, 2017. Dropped at least 30%. It was growing every month for the last two years and was never hit by the animals before.

Last week, I suspected it was the monetization. So I removed almost half the ads (even .js files that weren’t really ads, but were from known ad companies like Tynt).

Then over the last week, all the traffic came back. In my particular case, it was pretty simple and I feel like I lucked out!

That’s an interesting case, and great news for you! But that would be extremely fast from a recovery standpoint (from a major core ranking update like what I covered above). That leads me to think something else was going on with the ads you mentioned or how Tynt was implemented. Regardless, that’s great news for your site! Let me know if anything changes over the next few weeks.

Did you have any ads above the fold?

Thanks Google, I’m back! One year ago my site dropped at least 50% in traffic, but this update brought all of that traffic back.

Hey, that’s great to hear. Recovery can definitely happen, but it typically takes a lot of hard work and then Google’s quality algos need to be refreshed. One year seems a little long, but better late than never! Again, congrats. :)

Very informative. Loved the minute details presented in the article. Thanks Glenn. This really helps.

Google recently announced that refreshes and updates would be released simultaneously across all countries. I feel that’s still not the case, though. Of the Indian sites that I track, only a couple have seen movement (up or down) due to this update, and some of those were probably due to search trends related to the niche.

Anyways, the article gives a lot of useful insights in terms of how to maintain the site hygiene and provide a lot of value to the users.

Thanks again.

Thanks Tejas. I’m glad you found my post valuable! And that’s an interesting observation. Most of the sites I’ve analyzed were in the US or in Europe. I’ll do some detective work with sites in India soon and let you know if I’m seeing any major movement based on the 2/7 update. Thanks again.

Hello,

I read: “And if it’s too big of a job, then work on removing those urls from Google’s index.” What about news websites? A lot of our content is thin and isn’t visited after a couple of months. What is the best solution? Delete it with a 301, something else, or leave it?

Is it a problem to have hundreds of 301s (even in the host file)?

Regards

Great question. So there’s a difference between thin and short. There are some shorter posts that can definitely be valuable. That’s the tricky part when helping companies weed out thin content. Thin *combines* short with content that’s not valuable.

If you do have content that’s truly thin, and you can’t enhance that content, then you can noindex it. Then the urls are there for users (if it needs to be for some reason), but it’s out of Google’s index (so it can’t hurt you quality-wise).

Regarding 301s, if you 301 any url to another that’s not equivalent or relevant, Google can see those as soft 404s. I wrote an entire post about that with a case study. So your options are probably noindex or 404s. Here’s my post -> https://www.gsqi.com/marketing-blog/redirects-less-relevant-pages-soft-404s/

But again, definitely make sure the content is thin, and not just short. There’s a lot of valuable content that’s shorter in length… I hope that helps.

In Italy I saw sites with pages literally nuked from the index, in a Penguin-esque manner.

A site I made for a friend, an Italian cat breeder, a couple of years ago had its homepage NUKED: it isn’t indexed anymore. Other pages are still ranking, but it has lost the main keywords.

This site had received only spontaneous links, many from foreign countries, and had a good structure. Maybe the content was a little lackluster, but nothing that, in my view as a professional, would have led me to expect such a violent reprisal from Google.

Did you see anything like that?

Was that site hit by the 2/1 update or 2/7 update? They were very different. :) I might cover the 2/1 update soon, but need more time.

The one in February, which I think hit Italian SERPs a couple of days later.

There were two updates in February. 2/1 and then 2/7, and they were very different updates. :) I might write about the 2/1 update if I have time.

I’m getting close to not worrying about Google any more with all the ups and downs. I honestly feel that my site doesn’t do any of the things mentioned here – I’ve always tried to balance advertising with visitor experience. Yet, it seems we were hit in November, on February 1st and 7th.

Also, despite their protestations, it certainly does seem to me that they throttle traffic at least for some sites.

In my niche, there are some 800 pound gorilla sites that get most of the SERP love, and then there is also the issue of now also having to compete with Google itself in SERPs with info boxes and the like.

Very, very frustrating.

Hey, I’m sorry to hear you were hit by multiple core ranking updates. Has anyone analyzed your site through the lens of those updates? I’ve never seen a site get hit by a core ranking update hard that was completely ok from a content and user experience perspective. Maybe there are problems sitting below the surface that are hard to pick up. I’ve seen that sometimes. Hard to say without digging in, though.

https://gamerant.com

I try to make the site as legit as possible. Thinking of going back to http from https. Not sure what the heck else.

When did you migrate to https? I’ve analyzed many sites impacted by Google’s core ranking updates and I’ve never seen significant impact from just migrating to https. There’s usually much more to it than that. That’s unless you had a botched migration (which is separate from the core ranking update).

You can definitely see volatility in the short-term with a migration to https, but that should calm down pretty quickly. Also, if you feel it is the https migration causing problems, then you can reach out to Gary Illyes or John Mueller on Twitter. They asked for examples of sites that were negatively impacted after migrating to https (again, not related to the core ranking update). I hope that helps.

Thanks, Glenn. It was months ago. One thing that crossed my mind regarding https is if Google might have issues when there are things on the page that are not secure. Usually this comes from ads on the page. See attached image – most of the pages I visit on my site have the bottom icon, not the top. https://uploads.disquscdn.com/images/21beedfa7e7849d6ce6acabc25cde6b273a19f04f0c2561344a8c323cb2c452e.jpg

According to Gary (the guy that wrote the script for that ranking ‘boost’), they only actually check the URL – and not the actual certificate to see if a site is secure or not. So you might be safe on that front.

Is your site GameRant.com? I had a fullscreen popup ad shoot up when I clicked a blog post. That perhaps can be part of the problem.

That’s because you had adblock enabled. Non-adblock visitors do not get that.

Doug, FYI, even though I seriously doubt that was an issue, I’ve gone ahead and removed that unit.

I agree – honestly I think Google is full of crap and I was ecstatic when I switched my ads to adthrive (although now wondering if that could be source of traffic drop lol) so I was no longer relying on them for ads.

Still rely on them heavily for traffic though (85% of traffic from search) so I feel like I have to play this damn game. Will say though that I’m glad I focused more on e-mail over last few months since list has doubled since I started using all the serious opt in tools :)

Hello Glenn, could you please tell me the name of the keyword ranking tracker tool in your post? Thanks very much!

Sure, there are screenshots from both SEMRush and Searchmetrics in my post.

I’m sorry to hear you were negatively impacted by the 2/7 update. It was a huge update and many sites were impacted. The update has more to do with content, user experience, etc. than unnatural links. It’s similar to other major core ranking updates (which I’ve covered on my blog extensively).

Also, I saw a lot of relevancy adjustments while analyzing sites impacted by this update. And then I saw major user experience problems (both desktop and mobile). For the keywords you used to rank #1 for, but now don’t, is the content directly addressing what users would want to know? Also, have you analyzed your site through the lens of major core ranking updates like this (looking to uncover content problems, tech SEO problems, UX barriers, etc.)?

Hi Glenn,

After reading your article, one thing I caught on our site is that we have an affiliate link above the fold, and it’s a big image (their logo) for each review.

We removed it today and may remove our “More Details” button as well, since we have other CTAs on the page. Google may see this as an ad, even though it’s not a standard ad image size by any means.

Of course, this design has been like this for years on our site without any issue.

We’ve always been focused on user experience. Though given the nature of our site, our bounce rate has always been higher than I would like.

The reviews are detailed, list competing products, give pros/cons, allow for comments and ratings, say what we recommend, etc.

I will revisit Google’s guidelines and compare them against what we have on the site. Is there a version newer than March 2016?

Hey, great to hear you are digging in and making changes. Just be aware that it takes time to see recovery from core ranking updates (typically months). And you usually need to improve quality substantially. But again, great you are starting to make changes.

Regarding the Quality Rater Guidelines, March 2016 is the latest version. But based on Gary’s tweet this week, maybe a new one is coming. :)

Hi Glenn,

One of the websites I’m working on was hit on 7/2/17. The website was hit by a Panda update earlier, in 2014. The client changed the content back then to recover from Panda, so I’m not sure what could be the reason for the hit this time.

Are there any steps you would suggest I go through?

Thank you in advance,

Boni

Hi Boni. It’s really hard for me to say without analyzing the site. But since it had been hit by Panda in the past, it’s clearly in the gray area quality-wise. For example, maybe changing enough to recover at some point, but possibly sitting right on the border. Then a core ranking update rolls out and the site gets hit again.

I would review all of my posts about Google’s core ranking updates and heavily analyze the site through the lens of those updates. Then make substantial changes (like John Mueller explained). Also, there’s typically not one smoking gun, but instead, a battery of smoking guns. :) I hope that helps.

Thanks Glenn.

Glenn, after reading your previous posts, I realized that the client was also hit by the Phantom update in 2013 (basically hit in 2013 and now in 2017). Will keep you posted. :)

Great, detailed content, Glenn. I learned a lot. :) One question: my rankings don’t seem to have moved much, but my traffic has dropped dramatically in the last week. Any ideas?

Cheers

That’s interesting! You might want to dig into your Google Webmaster Tools (Search Console) account and identify the search queries that saw a decrease in impressions. Maybe that will help?

Is there seasonality that could be impacting traffic? Also, I mentioned running a Panda report in my post (which can be used for any algo update or traffic drop). I recommend running that to check the landing pages that dropped since the update (and the associated queries). That might provide some clues as to why that’s happening.
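As a rough illustration of the landing-page comparison described above, here is a minimal Python sketch. It assumes you have already exported Google organic clicks per landing page for two comparable date ranges (one before the update, one after) into dictionaries. The function name `pages_that_dropped`, the `min_clicks` noise threshold, and the sample URLs are hypothetical and are not part of the Panda report itself.

```python
from typing import Dict, List, Tuple


def pages_that_dropped(
    before: Dict[str, int],
    after: Dict[str, int],
    min_clicks: int = 10,
) -> List[Tuple[str, int, int, float]]:
    """Return (page, clicks_before, clicks_after, pct_change) for pages that lost clicks.

    `before` and `after` map a landing-page URL to Google organic clicks for
    equal-length windows before and after an update (hypothetical export format).
    Pages with fewer than `min_clicks` in the earlier window are skipped to reduce noise.
    Results are sorted so the largest percentage drops come first.
    """
    rows: List[Tuple[str, int, int, float]] = []
    for page, clicks_before in before.items():
        if clicks_before < min_clicks:
            continue  # too little traffic to draw conclusions
        clicks_after = after.get(page, 0)  # a page missing afterward counts as zero clicks
        if clicks_after < clicks_before:
            pct = (clicks_after - clicks_before) / clicks_before * 100
            rows.append((page, clicks_before, clicks_after, pct))
    rows.sort(key=lambda row: row[3])  # most negative change first
    return rows


if __name__ == "__main__":
    # Hypothetical sample data, for illustration only.
    before = {"/review-a": 1200, "/review-b": 800, "/guide": 50, "/tiny": 5}
    after = {"/review-a": 600, "/review-b": 790, "/guide": 60}
    for page, b, a, pct in pages_that_dropped(before, after):
        print(f"{page}: {b} -> {a} ({pct:.1f}%)")
```

From there, you would look at the queries driving the pages at the top of the list, since those are where the update hit hardest.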

So there’s no connection to the link profile?

Per Glenn’s analysis, mostly no. It’s more about Panda and Phantom.

Boni is right. The update was focused on content quality, low-quality user engagement, and other factors I mentioned in my post (and previous posts about Google’s quality updates).

For a few websites that dropped after a Google algorithm update, we cleaned up everything that might cause problems, such as AdSense, outgoing links, and ads, and left only clean text. We also changed the theme, and after a few months (since November) the sites still haven’t recovered. That’s why I asked about links: the only thing we can’t improve is user engagement (there’s no traffic, so we can’t improve it). Maybe we need to wait for a refresh, or maybe the sites have been flagged as untrustworthy.

Google is certainly dynamic, with constant updates that shock everybody. Thanks for sharing these, Glenn! I wonder when the next update will be and how it will change the algorithm AGAIN.

Thanks Emmerey! Major core ranking updates like this typically roll out every few months. That said, there’s not a set timeframe, so it can happen at any point. :)

You’re right. I guess all we can do is prepare by doing the right steps. Google doesn’t penalize if there isn’t anything to penalize to begin with.

Best article about the Feb 7 update. Thanks for sharing!

Hey, thank you! I’m glad my post was helpful. It was a huge update.

Look at my site, it’s perfect: https://goo.gl/cREgwz

I never bought links, run only AdSense plus two other banners, and publish quality content. The site grew steadily until February 7th. It has lost 50% of its visitors since February 7th and is still losing traffic. It’s been completely destroyed.

I’m sorry to hear you were negatively impacted by the 2/7 update! It was a huge update that impacted many websites globally. But, I’ve never analyzed a site that saw significant impact that didn’t have something wrong from a content, UX, or advertising standpoint. I would read all of my posts about Google’s core ranking updates and then perform a crawl analysis and audit. Also, there typically isn’t one smoking gun… Instead, it’s usually a battery of smoking guns. I hope that helps.

The date of the algorithm change corresponds with a change made to image search in Germany and France (see below), and whilst there is clearly something much bigger going on, the timing is interesting. I wonder whether Google changed other aspects as part of the same clutch of updates (not related to image search but to web results, affecting either the search UX or the algorithm) that have led to the shifts users are seeing.

https://support.google.com/webmasters/answer/6211453#search_analytics

February 7 – (Image search for Germany and France only)

The design and behavior of Google image search results was standardized to match the design and behavior in all other locations. As a result, there may be an adjustment in click counts for image search result data for searches from Germany and France.

Hello Glenn, thanks for this detailed article!

I’m currently creating a separate mobile version of my site. 60% of the pages have separate mobile URLs, and the other 40% are still in progress and show the desktop version to users. Since Feb 7th, I’ve seen a significant drop in traffic only on my mobile site, mainly on the homepage (even though the homepage has a separate mobile version). On the other hand, my rankings haven’t changed. What do you suggest I check in that case?

I’m sorry to hear you saw negative impact. When an update this big rolls out, many sites can see movement. I recommend reading all of my posts about Google’s core ranking updates and then performing a crawl analysis and audit through the lens of those updates. And as I mentioned in my post(s), it’s typically not one smoking gun. There’s usually a battery of smoking guns. I hope that helps.

Glenn, have you seen any sites with big traffic or ranking declines after Feb 15, rolling out over the weekend?

Ours followed that timing. We were hit in Nov 2015, recovered with the June 2016 update, and then took a more targeted hit in Dec 2016.

I’m trying to figure out whether it was the same update behind the Feb 7 reports here or a more traditional filter like Panda or Penguin.

Definitely the best post I’ve read on this so far. Two of my sites (same niche, both with high traffic) saw 30% drops starting around 2/6-2/7. I have a few things to fix/clean up, but nothing glaring.

It really seems strange that such high-quality sites can see such a big drop. One of my sites literally has thousands of quality backlinks from NYT, Forbes, NPR, etc., since we are a media expert in our niche.

Great insights, but I kind of agree with what Game Rant said.

Whilst Google seems to be continuously trying to improve things in the SERPs, help white-hat sites, etc., they are letting huge media conglomerates get away with shit that people were doing 10 years ago: sitewide footer links, and leveraging their huge portfolio of high-authority sites to instantly take a new site to millions of visitors per month.

Whilst I appreciate that every business should be able to leverage what it owns, there has to be a limit. This needs to be addressed; otherwise, us little guys have no chance at all.

Also, I wouldn’t even mind so much if the content on these big sites were useful, engaging content, but instead it’s that crappy 36-pages-for-one-article type of content with very little information that’s actually helpful. Yes, I am talking about BestProducts.com and all the others that Hearst, Purch, and NYTimes have been acquiring over the past 12 months or so.

It’s a joke, it honestly is…

Excellent article (as always).

Do you think that keyword density was also a factor in the last updates? I didn’t see it mentioned here.

One of my clients had a drop in rankings so serious I couldn’t believe it. We launched their redesigned website on the 6th, and they went from #1 rankings for major keywords to completely off the first 10 pages of Google. Like... 99 positions down. We did everything right with the site launch. Seriously bad timing. This must have something to do with it.

It will be interesting to watch how the rich snippet pans out.

We saw mostly positive movement around Feb 7, but the couple of sites that dipped had menus that our clients had not set up correctly.